Consumer-Contested Evidence: Why the ME/CFS Exercise Dispute Matters So Much

Sometimes, a dispute with a consumer movement comes along that has profound implications for far more than the people in it. I think the dramatic clash between the ME/CFS patient community and a power base in the evidence community is one of those. It points to weaknesses in research methodology and practice that don’t get enough critical attention. It raises uncomfortable questions about the relationship between researchers and policy communities. And it pushes the envelope on open data in clinical trials, too.

All of that underscores the importance of consumer critique of research. Yet the case also shows how conditional researcher acceptance of consumers can be.

ME/CFS is Myalgic Encephalomyelitis (ME)/Chronic Fatigue Syndrome (CFS), a serious, debilitating condition with a complex of symptoms, and a lot of unknowns. The argument about exercise is rooted in competing views about the condition itself.

A biopsychosocial camp has contended since the 1990s that regardless of how it starts, people with ME/CFS dig themselves into a hole with their attitudes and behaviors, leading to physical deconditioning (sort of the opposite of getting fit). They argue that people can dig themselves out of it by changing their beliefs and behaviors with the help of cognitive behavioral therapy (CBT) and graded exercise therapy (GET).

One of the key people from this camp is Peter White, who explained GET this way with Lucy Clark back in 2005:

A vicious circle of increased exercise avoidance and symptoms occurs, which serves to perpetuate fatigue, and therefore CFS….Patients can be released from their self-perpetuating cycle of inactivity if the impairments that occur due to inactivity and their physiological deconditioning can be reversed. This can occur if they are willing to gradually exceed their perceived energy limits, and recondition their bodies through GET.

ME/CFS groups see it differently. For them, the condition is more complex than fatigue and fitness, and believing you can recover won’t overcome it. From their experience and surveys since 2001, GET in practice causes relapses, worsening symptoms for most people; CBT doesn’t change the condition’s symptoms; and self-management “pacing” does help (managing being as active as possible while minimizing relapses).

By the early 2000s, the biopsychosocial camp could claim a few small trials in support of their position, but it was a very weak evidence base. They saw their treatment approach as a good news story for patients, though, and they had a lot of supporters. Exercise is something of a sacred cow to many – including in the evidence community. So there was a constituency that wasn’t going to be as critical of studies claiming advantages of exercise as they might be for other treatments.

There was another powerful camp that was attracted to the idea that cheap short interventions could get rid of ME/CFS, if only the person was willing to make an effort: insurance and welfare stakeholders. It was in their interests to reduce treatment expenditure and income dependency for this fairly common condition. The close associations disclosed by ME/CFS researchers in the biopsychosocial camp with these policy communities have the potential to be conflicts of interest – and they are certainly seen that way by many.

For people with ME/CFS, as George Faulkner explained, “unreasonable expectations of recovery” based on weak research was “presenting policies which reduce their options and income as benevolent and empowering interventions”. [PDF] Yet, even the most optimistic estimates show the overwhelming majority don’t benefit. And believing ME/CFS is a condition that can be cured by attitude and effort is stigmatizing, no matter how carefully you try to frame it. That’s harmful, for individuals who blame themselves (or get blamed) for their suffering, and for the collective of people deeply affected by a condition in effect tagged as “all in the mind”.

A small evidence base means the pool of researchers focusing on the topic is small, and the field can be relatively under-developed. White’s group, who were convinced CBT and GET were beneficial, had tried, unsuccessfully, to get a UK trial of the 2 therapies funded in 1998. That same year, the UK’s Chief Medical Officer established a working group to advise on ME/CFS. Its report in 2002 was followed by the establishment of a Medical Research Council (MRC) research advisory group that recommended larger treatment trials – and stressed the importance of consumer involvement.

White’s group then brought the consumer group, Action for ME, on board, adding arms to study pacing and specialist medical care alone, plus a greater focus on adverse effects. (This development is described by White’s group here, and by Action for ME here.) The alliance wouldn’t survive the coming storm.

White and colleagues’ proposal became the PACE trial in 2005, after the MRC awarded it the biggest grant ever for an ME/CFS trial. Ultimately, the MRC contributed £2,779,361 (US$3.6 million), a substantial chunk of which came from the Scottish Government’s Chief Scientist Office, the National Institute for Health Research (NIHR), the Department of Health, and the Department for Work and Pensions. Previous trials each had at most about 40 participants comparing 2 treatments. The PACE trial was shooting for more than 600 people who weren’t severely affected, comparing 4 options. So right or wrong, that trial’s result was going to dominate the evidence base.

The academics responsible for the trial were Peter White, Trudie Chalder, and Michael Sharpe. In 1993, Chalder was the lead author of the trial’s primary outcome measure tool – the Chalder Fatigue Scale, [PDF] and in 1991, Sharpe was the lead author of “the Oxford criteria” used to diagnose who was eligible for the trial.

Let’s stop right there. Those 2 research instruments are pivotal for this trial. And the intellectual investment in them by these co-authors doomed this trial before the first participant was ever recruited. Why?

To rely on the trial’s results for decision making in ME/CFS, for starters you would need to know that the people in it really had ME/CFS. The Oxford criteria for ME/CFS used to choose who was included can’t deliver that reliably. This is what the US Agency for Healthcare Research and Quality (AHRQ) concluded in a systematic review of diagnosis and treatment for ME/CFS in 2014, several years after the PACE trial:

None of the current diagnostic methods have been adequately tested to identify patients with ME/CFS when diagnostic uncertainty exists.

AHRQ dug into this further in 2016, after an NIH meeting agreed with them, concluding:

The Oxford (Sharpe, 1991) case definition is the least specific of the definitions and less generalizable to the broader population of patients with ME/CFS. It could identify individuals who have had 6 months of unexplained fatigue with physical and mental impairment, but no other specific features of ME/CFS such as post-exertional malaise which is considered by many to be a hallmark symptom of the disease. As a result, using the Oxford case definition results in a high risk of including patients who may have an alternate fatiguing illness or whose illness resolves spontaneously with time. In light of this, we recommended in our report that future intervention studies use a single agreed upon case definition, other than the Oxford (Sharpe, 1991) case definition. If a single definition could not be agreed upon, future research should retire the use of the Oxford (Sharpe, 1991) case definition. The National Institute of Health (NIH) panel assembled to review evidence presented at the NIH Pathways to Prevention Workshop agreed with our recommendation, stating that the continued use of the Oxford (Sharpe, 1991) case definition “may impair progress and cause harm.”

When they analyzed what this means for the evidence on treatment for people with ME/CFS, it’s devastating:

Blatantly missing from this body of literature are trials evaluating effectiveness of interventions in the treatment of individuals meeting case definitions for ME or ME/CFS.

AHRQ argued that you need specific ME/CFS symptoms in your criteria – like experiencing post-exertional malaise. The PACE trial measured that specific symptom, because it was a secondary outcome. So how many of the trial participants had it before the trial treatments started? The baseline data show it ranged from only 82% in the GET group to 87% in the treatment-as-usual group. That underscores AHRQ’s point about the risk of the Oxford criteria, doesn’t it?

The second blow in this one-two punch is the validity of the Chalder fatigue scale, which they used as a primary outcome. It’s a patient-reported outcome measure (PRO or PROM) – a questionnaire for participants. In 2011, a guideline for how to reliably assess the validity of PROMs was released, called COSMIN for short. And in 2012, Kirstie Haywood and colleagues followed it in their systematic review of PROMs for ME/CFS.

They paint a dismal picture of the quality of PROMs for ME/CFS and a lack of robust enough development and evaluation, including for the Chalder fatigue scale and the first version of the other PROM primary outcome for physical function (SF-36: they used version 2). Neither is strong enough to lean on for ME/CFS. The result for the evidence on ME/CFS treatment, they believe, is as devastating as the implications for AHRQ on diagnosis:

[F]ailure to measure genuinely important patient outcomes suggests that high quality and relevant information about treatment effect is lacking.

Lisa Whitehead did a pre-COSMIN systematic review in 2009: she came to the same conclusion about the lack of adequate evidence to support the Chalder fatigue scale.

Depressing, isn’t it? But it’s not surprising, either. These kinds of weak links in the science of doing research are common, fueling confusion and controversies. When the trial’s results were published in 2011, consumers studying the trial had a wealth of methodological weaknesses to zone in on – and plenty of information on the science of doing research to point the way.

I think this brings us to one of the important lessons from this controversy: involving a consumer organization isn’t likely to be enough, on its own, to prepare for wading into a controversial area where there is a strongly networked community with a lot at stake. If your methods aren’t in very sound scientific territory and your findings aren’t welcome, you will get hammered.

That could, and should, have a positive impact on research standards. “[Taking] sides in pre-existing methodological disputes” is one of the main ways HIV activists came to have such an influence on the conduct of clinical trials, according to Steven Epstein’s analysis.

Let’s jump forward to the current battleground with consumers over the Cochrane review that included the PACE trial, supporting its conclusions about benefits of CBT and GET and lack of effectiveness of its pacing intervention. Systematic reviews like the ones from Cochrane, AHRQ, and NICE are critical influences on the policy and clinical impact of a trial, and potentially for research funding. That’s especially key for one as big as PACE in an area where stronger trials that could confirm or challenge its results can’t be taken for granted.

Robert Courtney and Tom Kindlon are both people with lives severely affected by ME/CFS, who critiqued the PACE trial intensively. They posted detailed analyses of the Cochrane review and its verdict on the PACE trial and other evidence in Cochrane’s commenting system. Their comments from 2015 to 2016 raised important issues, but the review’s authors rather brushed off their concerns.

There is only 1 Cochrane review journal, but the reviews are produced and edited in Cochrane review groups, not centrally. There are 53 of those groups. The group that deals with ME/CFS is the Common Mental Disorders group, reflecting the disproportionate power base that psychiatry and psychology have established around this illness and its treatment. Courtney complained to the editor in chief in February 2018, when the review group was so unresponsive.

In November 2018, Cochrane reported action. Sadly, Courtney didn’t get to see this: he died by suicide in March, after a long struggle with health-related problems. Cochrane attached a note to the review, stating that Cochrane’s editor in chief, David Tovey, was not satisfied that the authors had made enough revision to their review in response to Courtney’s feedback, and a full update was in the works:

The Editor in Chief and colleagues recognise that the author team has sought to address the criticisms made by Mr Courtney but judge that further work is needed to ensure that the review meets the quality standards required, and as a result have not approved publication of the re‐submission. The review is also substantially out of date and in need of updating.

That update hasn’t been published yet. It could take a while, especially if a new set of editors is dealing with it in a new review group. That’s not just because of different people. Cochrane review groups have some standardized methods they are supposed to adhere to, but there can be big differences, too.

Courtney’s complaint also raised concerns about another review in progress at Cochrane. It was a review based on individual patient data from the exercise review. Tovey and his colleagues reviewed the version that had been submitted for publication by Cochrane too, and it was rejected. The already-published protocol for that review was then retracted (withdrawn in Cochrane-speak, with no reason given). And finally, the review group and Tovey agreed to transfer the responsibility for ME/CFS Cochrane reviews to a different review group, in acknowledgment of the fact that it isn’t a mental health disorder.

That’s a lot to achieve from consumer pushback on research, so kudos to Courtney and Kindlon for their effort. But will the changes in the updated review be critical enough of the evidence base, and closer to the AHRQ position? I think they should be. And I think the reasons for that are included in Courtney’s and Kindlon’s comments. There are a lot of them, but I’ll focus on one major one: the risk of selective reporting bias. (Note: I’ve removed and described or linked the references in quotes below for simplicity.)

From Kindlon’s comment (page 72 of PDF of the Cochrane review):

I don’t believe that White et al. (2011) (the PACE Trial) should be classed as having a low risk of bias under “Selective reporting (outcome bias)”. According to the Cochrane Collaboration’s tool for assessing risk of bias, the category of low risk of bias is for: “The study protocol is available and all of the study’s pre-specified (primary and secondary) outcomes that are of interest in the review have been reported in the pre-specified way”. This is not the case in the PACE Trial.

He’s right. The criteria for “low risk of bias” he referenced came from the table in 8.5 of the Cochrane Collaboration’s handbook.

The PACE trial began recruiting participants – and therefore collecting data – early in 2005, and the protocol was published in 2007. Data collection ended in January 2010. There was a data analysis plan, but it wasn’t published till 2013 (after the results of the trial). At some point between the protocol and the final data analysis, they dropped a primary outcome and elevated a secondary one to primary status, and lowered the thresholds for what would be classified as “recovery”.

Before and after this change, there were different ways of interpreting the data from the Chalder fatigue scale. The one in the protocol set a higher bar for benefit than the one they swapped over to: had they stuck with it, it would be harder to conclude one treatment option had better results than another. And that was, according to their first results publication, the intent:

Before outcome data were examined, we changed the original bimodal scoring of the Chalder fatigue questionnaire (range 0–11) to Likert scoring to more sensitively test our hypotheses of effectiveness.

Here, responding to criticisms about this, they said they made the change:

…because, after careful consideration and consultation, we concluded that they were simply too stringent to capture clinically meaningful recovery.

Here, they wrote that the first way of using the fatigue scale came from levels in the small 1993 Chalder paper, and a larger Chalder paper in 2010 was responsible for re-calibrating their approach. That 2010 paper didn’t measure fatigue in people with ME/CFS.
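To make the scoring switch concrete, here is a minimal sketch in Python. It assumes the usual description of the questionnaire: 11 items, each with 4 response options, scored 0/0/1/1 under bimodal scoring (which gives the 0–11 range quoted above) and 0/1/2/3 under Likert scoring. The function name and the example answers are hypothetical, made up purely for illustration, and have nothing to do with actual PACE data.

```python
# Sketch of bimodal vs Likert scoring for an 11-item questionnaire.
# Assumption: each item has 4 response options, indexed 0-3 from least to most fatigue.
N_ITEMS = 11
BIMODAL_WEIGHTS = (0, 0, 1, 1)  # options collapse to 0 or 1, so totals run 0-11
LIKERT_WEIGHTS = (0, 1, 2, 3)   # every step counts, so totals run 0-33


def total_score(responses, weights):
    """Sum per-item scores for a list of 11 option indices (0-3)."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    if any(r not in range(len(weights)) for r in responses):
        raise ValueError("each response must be an option index from 0 to 3")
    return sum(weights[r] for r in responses)


# Hypothetical respondent: top option on every item at baseline,
# then one step lower on every item at follow-up.
baseline = [3] * N_ITEMS
follow_up = [2] * N_ITEMS

for label, weights in (("bimodal", BIMODAL_WEIGHTS), ("Likert", LIKERT_WEIGHTS)):
    before = total_score(baseline, weights)
    after = total_score(follow_up, weights)
    print(f"{label}: {before} -> {after}")
```

In this made-up case the bimodal total doesn’t move at all, while the Likert total drops by a third. That is the sense in which Likert scoring is “more sensitive”: smaller shifts in answers register as change.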

Courtney, in his comments on this point (May 2016), wrote that something else happened early in 2010, too – PACE’s so-called “sister trial”, FINE, published their results:

The FINE trial investigators had found no significant effect for their primary endpoint when using the bimodal scoring system for Chalder fatigue but determined a significant effect using the Likert system in an informal post-hoc analysis.

The same thing happens, apparently, with the PACE data. In 2016, Carolyn Wilshire and colleagues (including Kindlon) published a re-analysis of the data made publicly available after a freedom of information request:

Publications from the PACE trial reported that 22% of chronic fatigue syndrome patients recovered following graded exercise therapy (GET), and 22% following a specialised form of CBT. Only 7% recovered in a control, no-therapy group. These figures were based on a definition of recovery that differed markedly from that specified in the trial protocol…

When recovery was defined according to the original protocol, recovery rates in the GET and CBT groups were low and not significantly higher than in the control group (4%, 7% and 3%, respectively).

The second threshold for fatigue had been lowered so much, they point out, that 13% of the participants were “recovered” by that measure before the trial treatments started.

The PACE trial authors responded, accepting the accuracy of the re-analysis. They pointed out, as they did in their original paper, that there is no gold standard way of measuring recovery from ME/CFS. They also wrote a paper on recovery in the PACE trial, which is about more than the primary outcomes.

The Cochrane review authors defended their assessment of low risk of reporting bias by arguing that it was a reported protocol variation, and therefore not a problem. Their note on their risk of bias judgement quotes a sentence from the trial publication which points to a second source of selective reporting:

“These secondary outcomes were a subset of those specified in the protocol, selected in the statistical analysis plan as most relevant to this report.” Our primary interest is the primary outcome reported in accordance with the protocol, so we do not believe that selective reporting is a problem.

This is problematic for several reasons. Firstly, the primary outcomes differed from those in the protocol. The review authors argue that, because they were still specified before data analysis, “it is hard to understand how the changes contributed to any potential bias”.

The Cochrane Handbook states clearly in the rationale about selective outcome reporting: “The particular concern is that statistically non-significant results might be selectively withheld from publication”. What if you change your mind about an outcome after data collection has ended, for a trial going on in your own clinic, because you are now concerned that your results won’t be significant? As Courtney pointed out, “Investigators of an open-label trial can potentially gain insights into a trial before formal analysis has been carried out”.

Secondly, the Cochrane reporting bias criteria are for the study as a whole – not just for primary outcomes. What’s more, they did rate one other study (Wearden 1998) at high risk of reporting bias – and that was based on concerns about secondary outcomes (here). And that was valid. That is explicitly stated in the Handbook, too:

Selective omission of outcomes from reports: Only some of the analysed outcomes may be included in the published report. If that choice is made based on the results, in particular the statistical significance, the corresponding meta-analytic estimates are likely to be biased.

I think rating the PACE trial at high risk of selective outcome reporting bias would be consistent with Cochrane methods and the rating for Wearden 1998.

Where does all this leave us? We’re still only arguing about subjective measures: there still isn’t much evidence of benefit on objective outcomes at all. We don’t know how different approaches to exercise, “graded” or “paced”, really compare yet. There is too much uncertainty.

A similar message has been coming from multiple trials of GET – but only one particular form of pacing has been tested in a trial. The message could be fairly consistent on GET because it really does have a small amount of benefit for a minority of people (about 15% over and above regular care in PACE). Or the message could be fairly consistent because the trials have all relied on similar and deeply flawed methods. We won’t know till there are more rigorous methods to use to test these questions, and strong large trials are done that learn from the errors in the story so far.

This clash around the PACE trial raised other critical issues about the practice of science – especially around openness and response to consumer criticism. Activists pounded away to get access to data, using a variety of official channels and a lot of pressure. There’s a lot to learn from this. We obviously still haven’t got open data practice sorted out yet.

In this case, defensiveness won out over transparency, and that was throwing gasoline on a fire. Although official processes have seen important data and other information released, the researchers have also removed a lot of material that used to be in the public domain. So much so that it’s now impossible to assess some of the concerns for yourself, because the materials have disappeared. That’s bad enough for any research, but it’s unacceptable for a publicly funded clinical trial.

Instead of responsiveness to criticism, some – not all – researchers have put massive effort into discrediting the activist community and rallying other researchers to their defense. It’s been a collective ad hominem attack. Being responsive when consumers are making mistakes or unjustified criticisms isn’t always easy, especially when there’s a barrage, with extremists in the mix. But it’s not only consumers who do that, is it? And there are important and legitimate issues here – not just people who don’t agree with the results.

This kind of clash happened to me early in my involvement in research, and I’ve written about that before. I don’t think there’s any shortcut. People need to keep listening and responding though, and as many bridges need to be built as possible. Carolyn Wilshire, a psychologist and co-author of the re-analysis of the PACE trial data, has written of the concerns about researchers’ therapeutic allegiances and other relevant points. People’s positions can make it very difficult for them to be fair when developing and doing research. Even if they weren’t entrenched beforehand, they can quickly become so when their research is under attack. People shouldn’t take it personally, but they do. And the time that it will soak up isn’t usually factored into the research process.

As Epstein pointed out from analyzing the battles over HIV clinical trials, getting these relationships right can advance science and social progress – even though that’s not always easy. As he said, you can’t grab onto a consumer group in a controversial area “solely in passive terms – as a resource available for use, or an ally available for enrollment”. But that’s too often how public involvement in science is approached. (A recent systematic review on patient and public involvement in clinical trials, for example, had as its primary outcomes effects on recruiting and retaining participants.) We have to get past that, and deal better with these kinds of clashes. They are inevitable.

As for the dispute over the validity of key methods here? I think the balance should, and ultimately will, tip towards the ME/CFS consumer movement on this particular contested evidence.

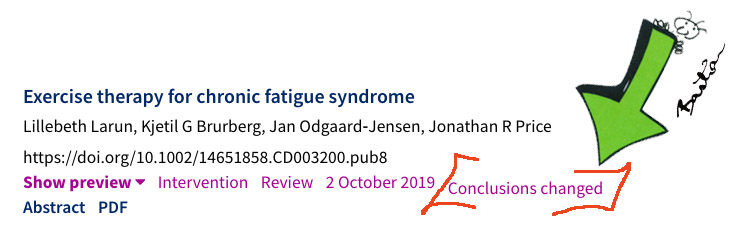

Development in October 2019:

The Cochrane review was amended, with changed conclusions – and a new review with a role for consumers announced. More in my post, It’s a Start: The Amended Version of the Cochrane Review on Exercise and CFS.

Development in February 2020:

Cochrane announced its independent advisory group.

~~~~

I have only focused here on a few aspects of the disputes about the PACE trial. If you want to read more, MEPedia is a great resource for exploring the critiques and the researchers’ responses.

I am grateful to the many people who have urged me to look at the issues related to the PACE trial and the related Cochrane review in recent years: you were all right – it was worth the effort. (I’m not naming any, as there were so many over the years that I’m sure to leave someone out: this was a mountain made of molehills.)

Disclosures: I was a health consumer advocate (aka patient advocate) from 1983 to 2003, including chairing the Consumers’ Health Forum of Australia (CHF) from 1997 to 2001, and its Taskforce on Consumer Rights from 1991 to 2001. I have not experienced CFS/ME and nor has anyone close to me.

However, at the time I first encountered CFS activists, I had a relevant personal frame of reference. I had to leave my occupation several years prior after a severe bout of repetitive strain injury (RSI) following a stretch of workaholism as a teenager. Then, RSI was regarded by some medical practitioners as malingering or psychological, rather than a physical condition. (You can read about the controversy around Australia’s RSI epidemic here, here, and here [PDF].)

As then editor-in-chief of a consumer health information website based on systematic reviews at the NIH, I was pressured about the inclusion of systematic reviews on GET and CBT and approach to CFS consumer information, but not by CFS activists.

I was the consumer representative from the foundation of the Cochrane Collaboration in 1993, and leader of Cochrane’s Consumer Network from its formal registration in 1995 to 2003. I was the coordinating editor of a Cochrane review group from 1997 to 2001.

One of the authors of the planned Cochrane individual patient data review, the protocol of which was withdrawn, is my PhD supervisor, Paul Glasziou. We have not discussed it, and have not discussed anything related to this post while I was considering, researching, and writing it.

Updates 17 February 2020: Added the announcement of Cochrane’s independent advisory group.

I also belatedly replaced the explanation of pacing, in response to comments from Valentijn, Ellen Goudsmit, and Nasim Marie Jafry. My thanks to Valentijn, Ellen, and Nasim, with apologies that I didn’t do it straight away (I thought I had – ouch!). The original version of this post was as follows:

The UK group, Action for ME, describes pacing this way:

Taking a balanced, steady approach to activity counteracts the common tendency to overdo things. It avoids the inevitable ill effects that follow. Pacing gives you awareness of your own limitations which enables you positively to plan the way that you use your energy, maximising what you can do with it. Over time, when your condition stabilises, you can very gradually increase your activities to work towards recovery. [PDF]

Updates 18/19 February 2020: Fixed a broken link and changed “massive effort into discrediting the whole community” to “the activist community” after feedback from a reader who prefers their feedback to be confidential.

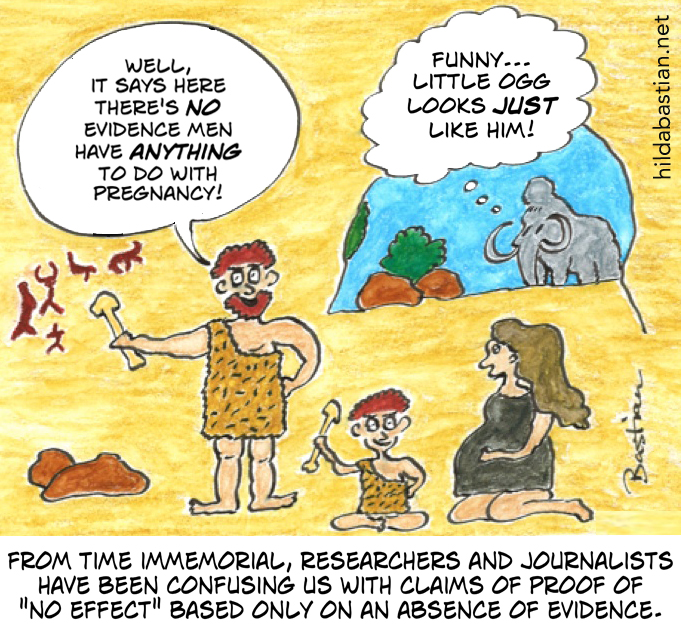

The cartoons are my own (CC BY-NC-ND license). (More cartoons at Statistically Funny and on Tumblr.)

Thank you for a balanced analysis. Sadly we see too few of them in this area. It is a very difficult area to summarize: it must have taken some time!

The only brief addition I would like to make is that in all the cases where objective assessments have been used (walking tests, step tests, employment, actometers), no real improvements have been found for either Cognitive Behaviour Therapy or Graded Exercise Therapy (the two main therapies that are promoted). My conclusion would be that CBT and GET are effective only in getting patients to regrade their answers on questionnaires.

Sadly, trying to bring this all to light has been a terrible burden on folk who suffer severely from their illness.

Thank you for this well researched article.

There is now also evidence that the PACE authors knew that the originally planned actigraphy-based outcome would likely show no improvement, and that it was dropped for this reason [1].

Studies of exertion intolerance in ME/CFS using the 2-day cardiopulmonary exercise testing method reveal a decline in peak oxygen consumption, maximal workload, and other parameters on the second day [2]. This is objective evidence that ME/CFS is associated with an abnormal physiological response to exertion and that it cannot be viewed merely as a state of deconditioning. The reports of harms associated with graded exercise therapy need to be taken much more seriously. The PACE trial’s reporting of harms was problematic for several reasons [3].

1. Comment on the Science for ME forums https://www.s4me.info/threads/pace-trial-tsc-and-tmg-minutes-released.3150/page-8#post-57463 based on the PACE trial TSC and TMG minutes which are available here https://www.s4me.info/threads/pace-trial-tsc-and-tmg-minutes-released.3150/

2. Cardiopulmonary Exercise Test Methodology for Assessing Exertion Intolerance in Myalgic Encephalomyelitis/Chronic Fatigue Syndrome https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6131594/

3. Trial By Error, Continued: Did the PACE Trial Really Prove that Graded Exercise Is Safe? http://www.virology.ws/2016/01/07/trial-by-error-continued-did-the-pace-trial-really-prove-that-graded-exercise-is-safe/

A brilliant analysis and article, thank you so much.

Two minor points: First the AfME description of pacing is actually what is commonly referred to as “GET-lite”. There is no goal of increasing activity levels in real pacing, though pacing can indeed help in stabilizing patients by avoiding exacerbations – which may result in patients being able to consistently do a bit more, or at least stop temporary or long-term decline. The suggestion that it can result in recovery is complete nonsense, and the statement that patients will be able to increase activity levels carries the same insinuations and associated harms as it does when promoted as part of GET. AfME has made some vast improvement in recent years in response to patient pressures, but old biopsychosocial habits are hard to break, apparently.

Secondly, as a possible point of interest, Simon Wessely co-founded the Common Mental Disorders Cochrane group which laid claim to ME/CFS, according to an extensive CV of his floating around the internet. He also has been a key player in advancing the biopsychosocial treatment of ME/CFS, with little involvement in researching actual mental disorders – hence it seems likely that that group was initially founded for the primary purpose of controlling the reviews (and thus narrative) of ME/CFS. Cochrane has also historically operated on a first-come-first-served basis if I understand correctly, where the first group to claim a disorder controls the reviews of it, and they can block other groups from getting involved. Perhaps this was a useful approach when Cochrane was starting up and needed something to offer reviewers in exchange for doing the work that sustains the organization, but it has been abused for decades and it’s past time to end that approach.

Wow. A brilliant representation of the UK’s woeful research record on ME and why the patients are so angry. Please forward to Wessely and co. Very, very insightful.

Thank you, Hilda Bastian, for this well-written article and all your time and effort you put into it.

Thank you for a breath of fresh air on this!

Very interesting, thank you. It’s good to have ‘outsiders’ consider what’s happened and how it happened in a balanced way that considers the issues with an open mind.

I was on the PACE trial, allocated to CBT. I had post-exertional malaise before starting PACE, BUT didn’t understand that it existed, nor that I had it, until I was on the trial.

I do remember them saying that if I wasn’t already involved in ME/CFS groups pre-trial, not to join until after the trial. I can’t remember why, it’s so long ago, but I think it was something to do with trying to keep my knowledge level consistent through the trial? It seemed reasonable to me, albeit somewhat inconvenient at the time, as I’d intended to join the local group around the same time. For me, I wasn’t even convinced I was unwell, nor that ME/CFS existed (despite having to work part time since 2001 because of it), right through till I went severe in 2013.

So I think one aspect that’s not been explored so much is the participant’s ignorance. I ended up on the trial cos my GP told me to google my symptoms and see what it most resembled and he’d refer me on that basis. I went back with “well, it seems to be this ME/CFS, but I’m not sure”, so he sent me to neurology to get known physical issues excluded. Then I ended up at Trudie Chalder’s clinic at King’s, crying in her office cos I was desperate, as it was preventing me from working and I could see myself homeless and penniless in pretty short order.

So to me, they said there wasn’t a magic bullet but they hoped they’d be able to help me do more by the end of it. But in reality I ended up doing far less, though it didn’t hurt so much symptom-wise. And I was hugely disappointed.

What annoys me is the way that my data and that of the other participants was twisted to fit their narratives. I joined the trial because I thought, well, it may help others, and if it doesn’t work they offered me the therapy of my choice afterwards (didn’t happen cos the pacing therapist was off sick), and it cut out the waiting list. So a mix of personal gain with a side of potentially helping others.

I don’t understand why the BMA opinion piece about the latest report from the HRA, on whether or not the trial protocols were changed correctly, seems to think that following set procedures absolves the PACE researchers from responsibility. It doesn’t. Throughout history people have followed set procedures to do great evil. Just cos it’s not illegal doesn’t mean it’s right. And THAT is something the entrenched researchers are refusing to recognise: the MORALITY of changing the criteria for recovery to lower than that for entry is totally unsustainable as a position for research that purports to find ways to cure people.

Thank you for a thorough and balanced treatment of the history and some of the underlying scientific questions in this debate.

I am slightly concerned by the characterisation of an ‘evidence community’ that is distinguished from the ‘patient community’. We should remember that patients’ own lived experience is also evidence and there are severe risks for liberty if clinicians (or others) demand that people ignore that evidence, or interpret that experiential evidence in one particular way or another. This is particularly important when we consider emerging bodies of evidence suggesting various metabolic differences between people with ME/CFS diagnoses and healthy controls, as well as evidence from patients reporting that they experienced relapses from following recommended treatments.

The problem is that there is a significant power imbalance between the clinicians and researchers defending the biopsychosocial model and ME/CFS sufferers who have to live with the consequences of treatment guidelines and public policies built on what appears to be a very weak evidence base.

Paulo Freire reminds us that: “Washing one’s hands of the conflict between the powerful and the powerless means to side with the powerful, not to be neutral.” – so at the very least, we should be calling for significantly more research on ME/CFS applying tight inclusion criteria and (in the case of clinical trials) careful attention to objective outcome measures.

Many fair points but also some b…t. AFME’s description of pacing is a nice way of describing GET. It’s not pacing as promoted by all other groups. And are they suggesting one can recover from ME? Perhaps only 6%. As for the change of scoring the Chalder Fatigue Score, I was partly responsible for that. It was good science to change to the Likert method. Nothing odd at all.

Perhaps one day soon, people will venture outside of group think and check that what they write is accurate. AFME supported the PACE trial and are hopeless when it comes to pacing. Which is sad as I helped them in the early days.

The criticisms re the PACE trial began in 2004. Kindlon and others came much later.

As a referee, I’ve seen the ‘son of PACE’. You have some time to recheck info and not blame White and colleagues for following evidence-based advice from two scientists/patients.

Thank you so much for such a well written article!

A well done and welcome commentary. May I add that data from PACE indicates that 47% of trial participants reported a co-morbidity of depression? This may well have contributed to such positive results from GET (15%) as you note.

Such doubling up on pathologies in PACE and in the Oxford definition also may contribute to acceptance of a “mental disease” interpretation in the UK and Commonwealth countries. The US Fukuda definition, much used in research, specifically excludes patients with a psychiatric co-morbidity.

Massively useful; I’ve bookmarked it. Thanks!

So informative. Thanks for bringing your expertise to this field and doing it in such an approachable manner.

Thank you for your excellent post, Ms. Bastian. I share the same concerns you wrote about, including the conflation of fatigue, post-exertional fatigue, and post-exertional malaise (PEM) among researchers and clinicians. PEM includes but is not only about fatigue. Patients and research participants also experience post-exertional sleep disturbances, problems thinking, sore throats, among other symptoms. No trials to date have truly measured PEM as an outcome.

Our group published a paper examining PEM last year in PLOS One, which can be accessed here:

https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0197811

Thank you for this ME/CFS article!

Thanks for the brilliantly detailed account of a difficult and contentious area.

Would you agree that part of the problem is that ME/CFS is unlikely to be a single condition, so any criterion for people “having ME/CFS” is likely to be vague and fallible?

Thank you for this well-researched, insightful and reasoned article. An excellent look into the true issues of poor scientific methodology and the ad hom attacks on critics who in reality consist of reputable scientists and scientifically literate patients (some of whom were themselves scientists, doctors, statisticians, etc before falling ill), not the ‘anti-science militant patients’ meme that always gets trotted out in order to discredit justified criticism. Thank you for showing things as they really are.

“The close associations disclosed by ME/CFS researchers in the biopsychosocial camp … have the potential to be conflicts of interest – and they are certainly seen that way by many.”

More than ten years ago the UK Parliament Group on Scientific Research into ME (The Gibson Inquiry) called for an investigation into the well known and publicly documented conflicts of interest:

“CFS/ME is defined as a psychosocial illness by the Department for Work and Pensions (DWP) and medical insurance companies. Therefore claimants are not entitled to the higher level of benefit payments. We recognise that if CFS/ME remains as one illness and/or both remain defined as psychosocial then it would be in the financial interest of both the DWP and the medical insurance companies.”

“[There are] numerous cases where advisors to the DWP have also had consultancy roles in medical insurance companies. Particularly the Company UNUMProvident. Given the vested interest private medical insurance companies have in ensuring CFS/ME remain classified as a psychosocial illness there is blatant conflict of interest here.” [1]

—-

“Follow the money” is a cliche for a very good reason. It’s well past time for a proper investigation into this massive scandal.

[1] http://erythos.com/gibsonenquiry/Report.html

Thanks, David – I didn’t spend a lot of time reading up on that part, but I think it’s clear that there have been people with a mixture of issues in the trials. And it makes sense that people would also be getting this diagnosis who maybe shouldn’t be (and vice versa). I think there are probably degrees of vagueness and fallibility, and the AHRQ review convinced me that this situation could be improved by what is already known. I very much hope the research trying to pin down causal factors bears fruit. People really need it.

I want to thank you for taking the time to read and look into this topic. I have ME (under whatever imperfect name we have) and appreciate those who take the time to read, look, and listen to all that has occurred within the perceptions of this illness and what it has cost people with it. The time spent listening, hearing, and writing about it is appreciated.

Thank you, Hilda, for an excellent, well researched article. I have been living as an ME sufferer in this medical dystopia since mid-1980s. I was diagnosed with myalgic encephalomyelitis (ME) by an NHS consultant neurologist in Glasgow in early 1984 after becoming severely ill with Coxsackie B4 virus at end of 1982 – there was an outbreak of CBV in west of Scotland at this time. I had an abnormal muscle biopsy and EMG, which helped confirm the diagnosis of ME. I had never heard of ME or Coxsackie and I will never forget the catastrophe and physical hell of ME punching into my life – I was an 18 year old undergraduate.

I remain ill today.

I have lived through the subsequent reframing of ME as ‘CFS’ by a group of UK psychiatrists, in early 1990s, including those involved in the hugely controversial PACE trial. It is astonishing to see how their own *beliefs* about ME became the dominant narrative. These researchers will often say in the small print that ME and CFS are not the same illness but will go ahead and conflate them anyway.

‘Classic ME’, the exclusively *post-viral* illness I was diagnosed with, described by the late infectious diseases consultant Dr Melvin Ramsay, has been effectively ‘disappeared’. And it is patients like myself and many thousands of others in UK who have paid the price. As you have highlighted, the Oxford criteria are overly inclusive and do not require the hallmark symptom of ME, which is muscle fatigability after trivial exertion.

A devastating, complex neuroimmune illness has been ‘diluted’ by these doctors to mean any illness that has idiopathic fatigue. The fact that exertion-intolerance is our key symptom has been ignored to our utter detriment. The fact that exertion *consistently* exacerbates our symptoms is interpreted as patients focusing too much on symptoms, and us perpetuating our illness with psychological factors. It is insulting beyond belief.

And whenever we have pointed out the harms of criteria conflation we have been ignored and maligned and misrepresented – almost wilfully and gleefully – in the media. Health editors and journalists also bear responsibility for keeping these psychiatrists’ harmful beliefs about ME afloat. And because we resist an inappropriate – and therefore harmful – mental health diagnosis we are accused of stigmatising mental illness. That is the stick they always use to beat us – instead of actually answering valid criticism.

The fact that we have lost our lives to this illness, unable to fulfil our potential, as well as suffering hideous physical symptoms on a daily basis has fallen on deaf ears. No matter our lived experience, our former jobs, our achievements, we have had no agency, we have effectively been ‘othered’ by the medical establishment. Pushing a biopsychosocial model of the illness – and, to be honest, saving money – has apparently been their only goal. The reality of ME patients’ lives has long been lost.

I am also glad that others have pointed out that Action for ME’s information on pacing is misleading and harmful. Pacing is not an option, it is essential, it is how we survive and endure the illness. But it does not ensure a gradual recovery, ever. And tragically so many of us have made ourselves more severely ill and disabled by pushing ourselves, through ignorance.

Yet those who have become more ill after being prescribed graded exercise therapy (GET) are being wholly ignored by the PACE team. It is unsurprising that MP Carol Monaghan, who has brought the PACE trial to be debated in Parliament, has said that ‘When the full details of the trial become known this will become known as one of the biggest medical scandals of the 21st century’.

I can’t think why your book hasn’t been made into a film. It has all the right ingredients for an Oscar winner.

There’s a chance that, when it is understood, it will be like chrysanthemums. Lots of plants that look like chrysanthemums are descended via different pathways and belong to different genera, and this only became apparent when genetic profiling became possible.

It’s important to recognise that work has already been done into the metabolism and genetics of patients diagnosed with M.E and this isn’t the picture that it’s creating. The picture is that criteria that don’t accurately describe the symptoms patients complain of produce a cohort whose illness can be better explained by a number of other diagnoses. However, when the criteria are a more accurate reflection of what patients complain of, there is a greater degree of similarity. It’s more likely that M.E is a condition that can be arrived at via a number of pathways than a number of conditions.

Thank you Hilda for looking into the issues related to the PACE Trial and the connected Cochrane review. I’m glad you found it to be worth the effort! I particularly enjoyed reading your concluding remarks:

“As for the dispute over the validity of key methods here? I think the balance should, and ultimately will, tip towards the ME/CFS consumer movement on this particular contested evidence.”

For ME/CFS patients, this can’t happen soon enough! There is so much at stake. Thank you again for the time and effort on this topic and for bringing these issues to light.

Thank you so much, Hilda for your fantastic piece and very apt cartoons. It really is such a relief to read such a fair and well-informed analysis.

It is particularly refreshing to see you identify the ‘collective ad hominem’ that has been plaguing coverage of these issues and stigmatising people with ME/CFS. It is something that has concerned many patients for a long time and I’m sure that many would very much appreciate you focussing more on this issue in a future post.

You mention the Cochrane review and the poor responses/brush off the review authors gave to Courtney’s concerns. It was upsetting to see recent media reports using Cochrane’s plans to withdraw the review in response to Courtney’s complaints as an example of science being distorted by ‘activist’ abuse and harassment. It is so clear that Courtney took a careful, reasonable and cautious approach to engaging with research that was affecting his life, putting a lot of time and effort into work that really shouldn’t have been his responsibility on top of all his health problems.

This can be seen in this Reuters piece from Kate Kelland, which includes several quotes from Colin Blakemore, who was Chief Executive of the Medical Research Council when it provided extensive funding and support for the PACE trial, and who whilst there dismissed concerns about the trial: https://www.reuters.com/article/us-health-chronicfatigue-dispute/exclusive-science-journal-to-withdraw-chronic-fatigue-review-amid-patient-activist-complaints-idUSKCN1MR2PI.

No right-of-reply is granted to those, such as Tom Kindlon, who have for years been making reasonable, legitimate critical comments on PACE and the Cochrane review.

This was followed by a Guardian Science Weekly podcast on the Cochrane ME/CFS and PACE issue in an episode asking ‘what role should the public play in science?’:

https://www.theguardian.com/science/audio/2018/nov/02/what-role-should-the-public-play-in-science-science-weekly-podcast A transcript of the podcast is available here: https://www.s4me.info/threads/the-guardians-science-weekly-podcast-2-november-2018-what-role-should-the-public-play-in-science.6474/page-3#post-117883

PACE researchers Michael Sharpe and Kim Goldsmith are podcast guests. Their depiction of critics of the Cochrane review and PACE is deferentially left unchallenged by the host. Again, no right-of-reply is granted to critics. Neither the Reuters piece nor this podcast provides any information about the legitimate concerns raised about this work, instead leaving audiences to assume that all criticism is unreasonable.

When the media and researchers encourage the view that the only way patients can have the scientific record corrected is to engage in abusive behaviour, ignoring or misrepresenting exemplary examples of patient engagement like the above, then this surely encourages the worst sort of relationship between patients and researchers.

In any diverse group there will be people making unreasonable or extremist points (as the discussion of almost any political controversy on social media so regularly shows). The attempt to define patient critics by prejudiced claims about their methods and motivations, or only referring to the very worst of them, is misleading and unfair. It is particularly harmful when it functions as a stigmatising ‘collective ad hominem’ against a group of ill people who have in many instances made great sacrifices to engage with research.

Thank you for the time and balance Hilda.

I have read with many cups of coffee and have so much to say but my main points are:

We all miss the point, which is post-exertional malaise (PEM), and the fact that reading a book can set it off gets missed. Cognitive exertion is just as bad as walking too far. When you combine the two, it can push the ME patient deep into a PEM state.

The other thing that gets missed is the fact that Sophia Mirza’s autopsy took place while the PACE trial was recruiting, and no one thought to stop and rethink? We have two autopsies that show the same dorsal root ganglionitis, and patients should be told this fact, so that they may make an informed choice on treatment. It also should be stipulated that any form of PEM is harmful and those harms reported.

Sports therapists and top athletes now understand overtraining and show us what damage can be done by pushing the body. The cycle of PEM shows us damage to the ME patient, yet we ignore this. The NICE guidelines stipulate that a heart rate monitor should be worn, but I have yet to know of any trial or ME/CFS centre that uses them. Now why is that?

This shows what can happen and we need to build ME around the same understanding.

https://www.telegraph.co.uk/health-fitness/body/much-exercise-bad-gut-dangers-training/

Every patient should learn to track their PEM and every trial should note this down too and work with it. Not to do this is irresponsible.

Hi Hilda! Great article. I was wondering if you could elaborate on this passage here:

“AHRQ argued that you need specific ME/CFS symptoms in your criteria – like experiencing post-exertional malaise. The PACE trial measured that specific symptom, because it was a secondary outcome. So how many of the trial participants had it before the trial treatments started? The baseline data show it ranged from only 82% in the GET group to 87% in the treatment-as-usual group. That underscores AHRQ’s point about the risk of the Oxford criteria, doesn’t it?”

I can appreciate the importance of having a “hallmark” symptom being used in the clinical criteria for identifying ME/CFS, but it seems to me that the PACE trial (in spite of its other weaknesses) successfully captured a cohort of true ME/CFS patients if 82-87% of the cohort reported having post-exertional fatigue prior to intervention. Since clinical presentation varies from patient to patient, surely 8/10 patients reporting having a hallmark symptom of a disease is within expectation? You seem to imply that that’s low and thus a source of weakness or grounds for criticism.

Maybe I misunderstood the point you were making. Would love clarification, thanks!

Yes, I think it’s low – especially given the small number of people who reportedly benefited. That means it’s possible that there was an apparent benefit because it benefited a subgroup of people who didn’t actually have the condition. That just leaves a question mark surrounding who any beneficial results could be applying to.

The results were similar for the patients meeting the London criteria (where PEM was a required feature), so I don’t think it’s correct to say that the patients who improved didn’t have the condition.