Does Our Poor Remembrance of Things Past Doom Much Nutrition Research?

Just how reliable are our memories of what we ate? How about what we will admit to having eaten? Questions like these have caused fierce public debate in the last few years. The value of much nutrition and diet research hangs on the answers.

Some researchers have been arguing that assessing energy intake by self-reported questionnaires yields data that’s irretrievably bad, and that the method should be abandoned. Others defend the usefulness of the data that most of this branch of science is based on. And the status quo pretty much rolls on. I’ve been wondering: is only one side right, could both sides be partially right – or is there just not enough evidence to settle the argument?

First up, the common ground between the critics and the defenders: the results of these questionnaires are seriously error-prone. According to James Hébert and colleagues, the bias (a 20-25% underestimate of energy intake in large-scale surveys) comes from:

1) portion-size estimation errors; 2) omissions of foods consumed; and 3) self-report biases (e.g., social desirability or overweight/obesity status).

Hébert’s group presents the case for the status quo: collecting data in ways that avoid the worst of the known pitfalls, and then adopting a statistical mitigation strategy. That means using forms of analysis and statistical modeling that weed out detectable problems and take the expected level of remaining error into account. (Here’s a systematic review of common statistical measures used in nutritional epidemiology.)

That requires a lot of confidence in how much we know about the inherent biases and how they are likely to affect any given study. I think it’s hard to be confident that everything is so settled, though. Hanna Lee and colleagues argued in 2015, for example, that gender hasn’t been taken enough into account, and that the differences could be a big deal. A different analysis by Laurence Freedman & co didn’t find a gender difference, but found that reliability differs by BMI. You can take an intense journey down a rabbit hole here. So it’s not surprising that one of the arguments I have seen in defense of these techniques is that they are fine if they are in the hands of people with enough expertise.

Amy Subar et al mount a stronger case than that, though, in support of a role for memory-based research methods. They argue that “the magnitude of underreporting is not as abysmal [as] Archer et al estimate”, and that some foods and nutrients are more reliably reported than others. In short, there are some babies still in this bathwater.

Edward Archer is a strong advocate of ditching the bathwater, once and for all. He and co-authors penned that case again in a paper published at the end of 2018. The flaws of memory-based methods, they argue, are simply “fatal” and “pseudoscientific”. But as a rebuttal published with it shows, there are flaws in these arguments too, like the claim that the validity of memory-based methods is unfalsifiable because it is never measured against anything objective.

Archer’s argument includes the claim that “human memory and recall are not valid instruments for scientific data collection”. That’s absurd, so he lost me pretty early on. Psychologist Ralph Barnes painstakingly analyzed Archer’s paper, the rebuttals, and Archer’s rebuttal of the rebuttals. He concluded that both sides were cherry-picking to make their own side look rosy and the other bad. That really doesn’t help us get anywhere, does it?

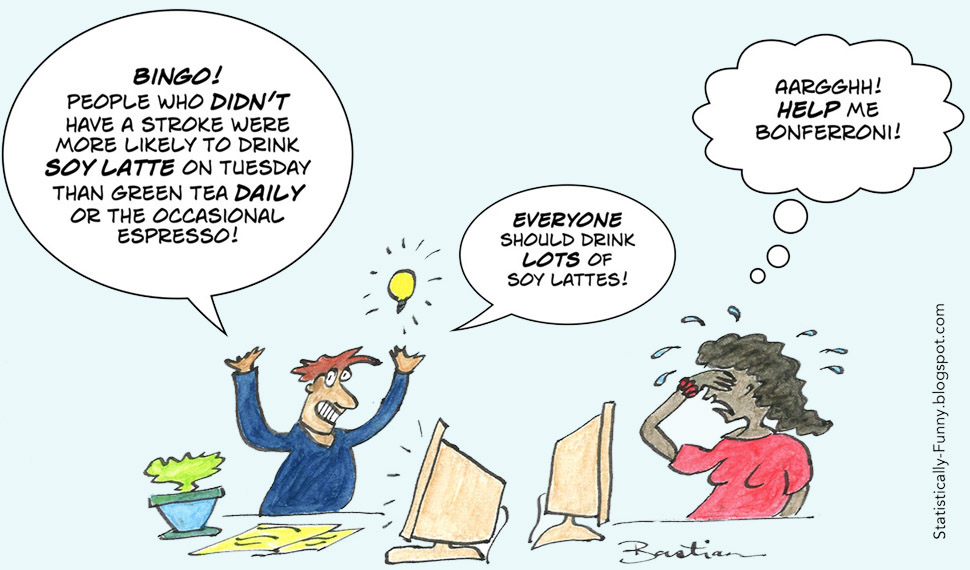

Nikhil Dhurandhar and colleagues are also on the side of abandoning self-reports, but they are much more measured. They argue that the errors self-reports produce “are decidedly not random”. Well, that makes mitigating bias after the event about as easy as removing mincemeat from lasagna. The only option, they say, is to work harder on developing feasible objective measures that avoid this complex tangle.
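To see why non-random error is so hard to fix after the fact, here is a toy simulation in Python. Every number in it is a hypothetical assumption, not an estimate from Dhurandhar’s group or any other study: true intake is generated independently of BMI, but the share of intake people leave unreported rises with BMI. Regressing reported intake on BMI then produces a spurious negative association, and rescaling everyone by a single average correction factor, even one that is exactly right on average, does not remove it.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def simulate(n=20_000, seed=1):
    """Toy population; every parameter here is a hypothetical assumption."""
    rng = random.Random(seed)
    bmi, true_kcal, reported_kcal = [], [], []
    for _ in range(n):
        b = rng.gauss(27, 5)      # BMI
        t = rng.gauss(2400, 300)  # true daily energy intake, unrelated to BMI by design
        # Non-random error: the share of intake left unreported rises with BMI,
        # 10% at BMI 20 plus 1.25 percentage points per BMI unit, clipped to 0-60%.
        under = min(max(0.10 + 0.0125 * (b - 20), 0.0), 0.6)
        r = t * (1 - under) + rng.gauss(0, 150)  # plus some purely random noise
        bmi.append(b)
        true_kcal.append(t)
        reported_kcal.append(r)
    return bmi, true_kcal, reported_kcal


bmi, true_kcal, reported_kcal = simulate()

# Naive "mitigation": rescale every report by one average factor, computed from
# the simulated true intakes as if a validation study had measured average
# underreporting perfectly.
factor = sum(true_kcal) / sum(reported_kcal)
corrected_kcal = [r * factor for r in reported_kcal]

for label, y in [("true", true_kcal), ("reported", reported_kcal), ("corrected", corrected_kcal)]:
    print(f"{label:>9} intake vs BMI: slope {ols_slope(bmi, y):6.1f} kcal per BMI unit")
```

Because the uniform correction multiplies every report by the same factor, it scales the spurious slope up rather than removing it. Undoing this kind of bias would require knowing exactly how the error depends on BMI (and on anything else it depends on), which is the knowledge the critics doubt we have.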

That’s an appealing argument to me. But the alternatives don’t only need development; they have problems of their own. Some of the measures they point to as needing work are photography, chewing and swallowing monitors, and wrist motion detectors. Luke Gemming and colleagues did a systematic review of the photography options, like taking a photo of what you’re about to eat or drink with your phone or a wearable camera. These methods, too, they point out, could be prone to some self-reporting bias, as well as to differences in reliability along, say, age lines.

If you are wondering about more biochemical methods, that route isn’t going to solve all the problems either. (See for example here, here, here, and here). As they say, “challenges remain”.

It doesn’t take long delving into this area to understand why the status quo prevails. The sheer weight of all that research and expertise seems to generate comfort and inertia. There are critical issues to health here, and carrying on with finessing the least worst traditions must seem better than letting go. Dhurandhar & co argue, though, that sometimes, doing nothing is better than doing something, and this is one of those cases. Some of the alternatives aren’t great, they say, but they are better than what we rely on now, so that is the way forward.

I found that argument convincing, but Subar & co also made a good case for self-reports, in some circumstances at least. Still, overall, it seems safer to be skeptical of nutrition claims from this kind of study.

Back in 2013 I wrote about the origins of beliefs about dietary fiber and cancer, arguably the first big “nutrient X causes disease Y” fad. I talked about an analysis of 34 cases where there were claims based on epidemiological data that had also been tested in randomized trials. In only 6 did they come to the same conclusion. If those continue to be the odds, it’s not reassuring, is it?

~~~~

For a broader overview of issues in the reliability of nutritional science, check out Christie Aschwanden’s “You can’t trust what you read about nutrition”.

If you want to read more about how diet is measured, there is a detailed technical chapter by Frances Thompson and Amy Subar at the NIH [PDF].

The cartoons are my own (CC BY-NC-ND license). (More cartoons at Statistically Funny and on Tumblr.)

Here’s my 2 cents – we keep self-reported data, because we have no choice. But we do two things to make its use more rigorous.

1) We prioritize the more accurate methods. If the 7-day food diary is more accurate than the FFQ, then the media should publicize Malmö Diet and Cancer study results over, say, NHS/HPFS findings. And the Malmö Diet and Cancer study team should publish more independent papers, instead of allowing much of their research to be subsumed into EPIC-Europe and aggregated with all its FFQ studies, as currently seems to be happening.

2) FFQ data is validated against 7-day food diary sub-sampling. This gives coefficients that can be extremely weak; I’ve read of a 0.14 validation coefficient for saturated fat, and 0.4-0.5 is normal. That could be characterized as likely to be about half-wrong. In such cases, shouldn’t the 95% confidence intervals be widened to reflect the extra uncertainty revealed by the validation coefficient?

FFQ data is what it is, inaccurate and approximate, so why should it be fed through the exact same statistical methodology as if it were as accurate as the result of a blood test?
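The question about widening confidence intervals has a standard answer in nutritional epidemiology: regression calibration. It divides the observed log relative risk by an attenuation factor estimated in a validation substudy, and uses the delta method to carry that factor’s own uncertainty into the standard error. The Python below is a minimal sketch, assuming non-differential error and a reference method whose errors are independent of the questionnaire’s; that second assumption is shaky when the reference (like a food diary) is itself a self-report. The attenuation factor `lam` is a regression slope (reference intake regressed on questionnaire intake), not the correlation-style validation coefficient mentioned above; under the simplest classical error model it equals the square of the questionnaire’s correlation with true intake. The function name and all inputs are hypothetical.

```python
import math


def calibrate_log_rr(log_rr, se_log_rr, lam, se_lam=0.0, z=1.96):
    """Regression-calibration sketch for a single exposure.

    log_rr, se_log_rr: observed log relative risk and its standard error
                       from the main (questionnaire-based) analysis.
    lam, se_lam:       attenuation factor and its standard error from a
                       validation substudy (hypothetical inputs here).
    Returns the corrected log RR, its standard error, and a confidence
    interval on the RR scale (95% with the default z).
    """
    if lam <= 0:
        raise ValueError("attenuation factor must be positive")
    beta = log_rr / lam
    # Delta-method variance of a ratio of two independent estimates:
    # the first term rescales the main-study error, the second adds
    # the uncertainty in lam itself.
    var = se_log_rr ** 2 / lam ** 2 + log_rr ** 2 * se_lam ** 2 / lam ** 4
    se = math.sqrt(var)
    ci = (math.exp(beta - z * se), math.exp(beta + z * se))
    return beta, se, ci


# Hypothetical example: observed RR 1.20 (95% CI 1.05 to 1.37), lam = 0.4.
obs_rr, lower, upper = 1.20, 1.05, 1.37
se_obs = (math.log(upper) - math.log(lower)) / (2 * 1.96)

for se_lam in (0.0, 0.08):
    beta, se, (lo, hi) = calibrate_log_rr(math.log(obs_rr), se_obs, lam=0.4, se_lam=se_lam)
    print(f"se_lam={se_lam}: corrected RR {math.exp(beta):.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

With these made-up inputs, the corrected relative risk moves away from 1 and the interval widens, and it widens further once the uncertainty in `lam` is included. The smaller the attenuation factor, the larger both adjustments become.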