The Skills We All Need to Move Past “Anti-Science” and “Us”

If you didn’t believe a prevailing scientific position, you used to be part of a small fringe. To get information on your side, you would hunt around a certain type of bookshop and subscribe to newsletters with distributions so small that they were addressed by hand. A few journalists occasionally gave your position fleeting access to a wider public, but it wasn’t enough to gain much traction.

Of course, that was before the internet. It’s a whole new ballgame now. Information and misinformation can hurtle freely between large numbers of people via social media and email. What would once have stayed a fringe issue now has a medium that can enable it to grow into a cultural force.

Scientific consensus doesn’t reliably hold sway anymore, even over people with science degrees in the US. According to the 2015 Pew Internet/AAAS survey, around 40% of them believed genetically modified foods are unsafe to eat, and a similar proportion didn’t believe human activity is causing serious global warming. As anthropologist Elisa Sobo and colleagues argue:

“The idealized expert-generated, one-way, authoritative reign of science is over”.

We are all part of the information ecosystem in important ways now. We haven’t had long to adjust to it, or to the massive increase in the volume of scientific output. We need new approaches and new sets of skills. And we need to reconsider the way we think about the rejection of scientific evidence, too.

When we have questions or need to make a decision, we might turn to some kind of authority for answers. But that’s not all we do. Things stick in our minds while we’re cruising around the internet, and almost always, their context or source doesn’t stick with them. We’re not assembling a neat, coded catalog of only-reliable information in our minds.

The Sobo paper describes a common approach to forming our opinions and reaching decisions:

“Habits of mind fostered by Web 2.0 demand of us continuous interrogation using multiple sources…[This leads to] self-curated assemblages of ideas drawn from multiple sources using diverse criteria”.

Sobo and co. call it “Pinterest thinking”: curating a basis for opinions and decisions the way people curate personal collections of images on Pinterest (a kind of virtual scrapbook). Other people’s experiences, study results, interpretations of scientific information, and viewpoints could all be in the mix. A narrative is stitched together, sometimes out of contradictory parts.

This isn’t anti-science, even when it leads people to reject a scientific consensus. Few people probably reject all science, although many don’t give it the weight we might like. And a lot of science is bad science, no more reliable a route to a conclusion anyway. Systematically reviewing what’s known, using methods rigorously designed to reduce the impact of bias, is a lot of work, and it’s still not the backbone around which most studies are done and interpreted.

I don’t think we should be so quick to use pejorative terms like anti-science, denialism, and conspiracism. Take conspiracy, a word that gets thrown around a lot in science-related conflicts. It’s one of the ultimate “them” words, isn’t it? We collaborate, coordinate, and influence: they conspire and infiltrate.

One of the consequences of conflict-ridden communication is a spiral of silence that can distort our understanding of what most people are really thinking [PDF]. Retreating into echo chambers and calling names at people in other echo chambers is a way to rally the troops, and that wins some kinds of battles. I doubt it spreads consensus and learning, though. We’ve rejected hostile environments and shaming in education for good reasons.

There are situations that demand confrontation, of course (more on that here). But when it comes to discussing scientific evidence and controversy, I think the onus is on the habitual use of polarizing communication strategies to prove that it does more good than harm.

There’s another fundamental reason to move away from speaking about the rejection of scientific evidence in terms like anti-science. It positions irrationality “out there”, completely separate from the way our own science-literate minds work. It’s just “them”, not us at all. We just have to persuade “them” to see the light. I think that diverts us from where we need to start.

Conspiracism and denialism are parts of an unhappy cluster of bias and reasoning problems that aren’t just close to each other – they overlap and intertwine. Motivated reasoning and confirmation bias are key common threads. They are problems for us all. When we get behind weak science and exaggerated claims because they fit our side of an argument, it makes us less trustworthy – and gives opponents a stronger case.

The broad and deep hold of beliefs contrary to the weight of scientific evidence isn’t fully explained by differences in education or in religious and political affiliation. That so many people, even among those with science degrees, aren’t immune to industrial and ideological “merchants of doubt” suggests traditional approaches to scientific literacy aren’t enough.

Last month I was one of the invited presenters at a National Academies of Sciences meeting on a research agenda for the science of science communication. I spent a lot of time catching up on the literature in a variety of fields and thinking about this problem, and I plan to write that up formally.

I came to the conclusion that there are 4 important areas of skill for all of us: debiasing, critical thinking, (social) media literacy, and counteracting biased communication. Unfortunately, none of them yet has a strong “how to” base of real-life empirical evidence that we know applies in the internet age. And we’re paying a heavy price for that. There are enough hits and misses now, though, to point us in some directions.

1. Debiasing techniques

This is the hardest and the most important. Just knowing about cognitive biases and the danger they pose to our own thinking, decisions, and actions doesn’t protect us.

Balazs Aczel and colleagues point out that we need to deeply internalize the importance of cognitive bias, have the skills to head off specific biases before they derail our thinking, recognize when those skills are needed, and then control ourselves well enough to use them effectively. None of that is easy or second nature.

Scott Lilienfeld and colleagues sum up the situation with our knowledge here:

“Psychologists have made far more progress in cataloguing cognitive biases than in finding ways to correct them…

“In particular, more research is required to develop effective debiasing methods, ascertain their crucial effective ingredients, and examine the extent to which their efficacy generalizes to real-world behaviors and over time”.

In the absence of strong evidence, this is the best advice I’ve got about honing and using debiasing skills:

- Slow down. In fact, stop. Between a thought and a reaction there is a space – go back to it. Think this through.

- Why are you agreeing with or dismissing this study, argument, or claim? Does it make you feel good? Vindicated? Indignant? Anxious? Your emotions might point to your biases.

- When it comes to research results, would you take this study as seriously if its conclusions were the opposite? If not, that’s another sign to be careful.

- “Hands up who’s not here!” I used to have a cardboard hand I’d hold up for this when I did advocacy training workshops. What and who is missing is always important. If you’re looking at a study, what other studies corroborate or contradict it? You can’t usually rely on the authors of that study to answer that question for you, unfortunately. Who do the results apply to? Who could be more or less helped and harmed by an argument?

We don’t call controversies “hot button issues” for nothing. People are trying to push your emotional buttons. Don’t make it so easy for them. Value the places and writing that encourage deliberative thinking. It takes time, but we pay a price for taking too many shortcuts on things that matter.

2. Critical thinking skills

This is a goal of most education, isn’t it? But we’re not as good at it as we think we are – and nowhere near good enough for an age of internet-driven information overload. (For a reminder of how far short we fall, look at the results for people with science degrees in the 2015 survey about the gap between the public’s and scientists’ views.)

Critical thinking covers a wide range of skills, such as logic, recognizing methodological and cognitive bias, and statistical literacy. The Aczel paper I discussed above pointed to evidence that teaching these skills isn’t working all that well – again, partly because people don’t apply them widely.

Statistical skills might be particularly valuable, but they’re in scarily short supply – even among many scientists for whom they are tools of the trade.

We’re going to need a lot of work in specific areas of knowledge, both in formal education and outside it. Here’s an example. An international group is working together to improve critical thinking about the effects of healthcare treatments, including strengthening the theoretical and empirical basis for doing so. You can see more at Testing Treatments Interactive. [Disclosure: I’m a little involved with TTi, mostly in connection with my Statistically Funny cartooning.]

Here’s another example. Know Your Chances is a book that’s available for free online. Written by Steven Woloshin, Lisa Schwartz, and Gilbert Welch, it’s a guide to understanding some basic health statistics and research biases. And the book’s effects have been empirically tested – it could increase your skills.

3. (Social) media literacy

Media literacy includes critical thinking skills, but it’s also “the ability to understand, analyze, evaluate and create media messages in a wide variety of forms” [Edward Arke and Brian Primack]. Social media makes our need to develop these skills even more acute.

I got sidetracked from working on this post this month by a classic example of confirmation bias in social media. I saw a blog post on what was obviously a seriously problematic study and couldn’t believe it had been covered at all. Then someone I admired tweeted about it. And another. And then another.

Fortunately, most people held back, and that study didn’t go truly viral. It made me wonder, though: is there no research too poorly conceived, executed, and reported for people to take seriously, as long as the authors’ conclusions are “interesting”? (I wrote about it in my previous post on research spin.) Inhibition failure, which limits how often and how well we apply critical skills, becomes especially acute on social media. Social media is a great test of impulse control!

Social media are part of the problem and the solution. The internet and social media offer a great opportunity for democratization of knowledge and information sharing. We’re creating small and large circles of influence, but there’s a lot we still have to learn about how to do it well.

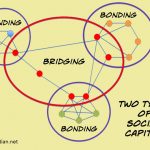

In social capital terms, there’s a lot of very strong bonding going on in social media: so much so that echo chambers and information bubbles are a real problem.

We’ve got a lot to learn, though, about how to create bridging capital effectively on social media if we want scientific consensus to spread, and about how to ensure strong diversity in new media. Social influence works strongly among people we see as peers. If for no other reason than this, a lack of diversity in the public faces of science is an urgent issue.

4. Counteracting biased communication and studies

The weakness of the evidence in this area surprises me the most, I think. I started my list with the hardest, and I’m ending it with what should be the easiest to study and to do.

There are incredibly difficult challenges here – especially how we can become more comfortable with uncertainty, communicate it well, and increase other people’s tolerance of it, too. But some shouldn’t be so hard, like not using humor and snark in ways that make things worse (a particular pitfall for me as a cartoonist, obviously: I think I blew it here, for example), and becoming skilful at detecting serious bias in others.

We need robust empirical studies in this field, as well as up-to-date rigorous systematic reviews. The research needs to be open access, so that community members and communication professionals can learn from it – and so they can help shape an agenda for research that is of practical value, too.

Take the issue of whether there is a backfire effect when trying to debunk misinformation about vaccines. I quite quickly found 5 studies based on CDC information (there could be more), but no systematic review. From a quick look, my guess is we might not have a solid basis for practice yet.

There are a lot of weaknesses, and the authors come to conflicting conclusions. That’s not surprising, given how much the studies differ: the people studied include students, primary care patients, people from a research panel, and people from Mechanical Turk, for example.

But look at what’s happening. Study A was published in 2005 [PDF], B in 2013, C in 2014, D in 2015 [PDF], and E a few months later in 2015. B and E cite A, but C and D don’t. B isn’t cited by C, D, or E. It’s no surprise, then, is it, if everyone else doesn’t realize how fluid this situation is?

[Update 20 November 2016] This month, key authors of C and D acknowledged, in light of findings from non-vaccine studies, that their conclusions about a backfire effect may not have been justified. (I think the questions remain far from settled.)

[Update 1 April 2017] A study using a hypothetical scenario, again not about vaccines, found that repetition improved the effectiveness of retracting misinformation: the opposite of a backfire effect. This study’s authors included Stephan Lewandowsky, one of the authors of the Debunking Handbook – a major promoter of belief in the backfire effect in science communication. (I still think the questions aren’t settled.)

Scientists could be doing a whole lot better at helping us spread an understanding of what we know from science!

“Doubt is our product”. That’s a famous quote from an industry insider trying to counter scientific studies that threatened commercial interests. Naomi Oreskes’ work offers important insights into that process, and into the inherent challenges of communicating science’s hedged and complex relationship with doubt and certainty [PDF]. We need to help people put things into perspective, now more than ever. Since the era of idealized central authority is over, and the era of democratized knowledge is here, our starting point isn’t really any “them”. It’s ourselves.

Follow-up post: 3 things to do to become part of virtuous spirals of trust in science.

~~~~

Feel like reading about statistical biases now? Join Gertrud the unhappy early bird at my post about selection bias, length bias, and lead time bias (Statistically Funny).

Or for some exercise in critical thinking, check out How to Spot Research Spin: The Case of the Not-So-Simple Abstract.

Correction on 3 April: The original mistakenly stated that 30% of people with science degrees in the 2015 Pew/AAAS survey believed GMOs are unsafe to eat: it was 39%.

The cartoons are my own (CC-NC-ND-SA license). (More cartoons at Statistically Funny and on Tumblr.)

Sources for the timeline:

- 1993: The first widely used graphical web browser (Mosaic), and around when email use began to take off.

- 1996: Greenpeace anti-GM protest at the 1996 World Food Summit in Rome.

- 1998: Ground zero for the claim of a link between MMR vaccine and autism – February press conference at the Royal Free Hospital in London, on the publication of Andrew Wakefield’s Lancet paper (2010 retraction notice). (Around 25% of people in the US were Internet users that year.)

- 2000: 50% of people in the US were Internet users.

- 2004: Facebook launched in February.

- 2006: Twitter launched in July.

- 2009: Climategate email controversy.

- 2009: 80% of people in the US were Internet users.

- 2014: 87% of people in the US were Internet users.

- 2015: A Gallup poll asked whether people personally think certain vaccines cause autism: 6% said yes; 41% said no; 52% were unsure. (See also: in a 2013 survey via Mechanical Turk by Gwen Mitchell and Kenneth Locke [PDF], 10% believed vaccinations are one of the 2 main causes of autism; a 2013 Harris Poll found 33% of parents agree vaccines “can cause autism”.)

* The thoughts Hilda Bastian expresses here at Absolutely Maybe are personal, and do not necessarily reflect the views of the National Institutes of Health or the U.S. Department of Health and Human Services.