5 Things We Learned About Peer Review in 2019

For something so fundamental to the practice of science, it’s perplexing that it took so long for serious research into editorial peer review to get off the ground. The earliest experimental study I could find was published in 1977, and there still aren’t many of them. I guess it’s a classic case of fish not seeing the water they’re swimming in.

I’ve written a couple of posts covering milestones in peer review research up to the end of 2018. If you want to catch up on the main things we know about peer review at journals, there are links to those at the bottom of this post. Now let’s get straight to the year’s highlights – and please let me know via the comments or Twitter if you know of more studies that have moved us forward.

1. Peer review might sometimes be a kind of academic matchmaking, increasing the chances of future scientific collaboration.

[Exploratory study of co-authorship networks.]

The data come from only one journal, it wasn’t a dramatic phenomenon, and there are other possible explanations for the results. So there are big caveats here. Still, this study adds some substance to the theory that editors selecting peer reviewers could be influencing future co-authorship. And it certainly broadens the perspective we should have of peer review’s potential impact.

Pierpaolo Dondio & co studied the co-authorship networks of authors and referees at an interdisciplinary journal on understanding social processes using computer simulation. They analyzed authorship patterns for 2,232 author/referee couples participating in double-blind peer review of 761 manuscripts.

Dondio and his colleagues measured the degrees of separation between authors and referees in the co-authorship network at intervals. Over time, more of these couples moved closer together in the network than you would expect by chance, and a few became co-authors:

[W]e found that 99 referee-author couples (6.65% of the total) reduced their distance from >3 to ≤3 degrees, against only 0.84% of couples in the random sample. 284 couples (19.08% of the total couples) that had a >4 distance during peer review reduced their distance to ≤4 over time, against 6.61% of the random sample. 28 couples took less than a year to reduce their distance by more than one step, and 44 took less than two years. Furthermore, 28 couples (1.88% of the total couples) reduced their distance to 2 degrees, against only 0.13% of couples in the random sample. Seven couples arrived at step 1 by publishing an article together a year later.

Now, they note that this could just reflect a natural concentration of expertise. But they cautiously hypothesize that peer reviewers could be following up on published papers they had reviewed and establishing new collaborations.

This isn’t the first time anyone’s studied whether there’s an impact on peer review recommendations when there’s a close relationship between the peer reviewer and one or more of a manuscript’s authors. But the exploration of impact on later scientific collaboration appears to be new.

Dondio’s group concluded:

Peer review is not only a quality screening mechanism for scholarly journals. It also connects authors and referees either directly or indirectly. This means that their positions in the network structure of the community could influence the process, while peer review could in turn influence subsequent networking and collaboration.

Pierpaolo Dondio and colleagues (2019). The “invisible hand” of peer review: The implications of author-referee networks on peer review in a scholarly journal.

2. Peer reviewers may provide no line of defense against authors’ conflicts of interest.

[Randomized controlled trial.]

If this trial at the Annals of Emergency Medicine is any guide, we can’t count on peer reviewers to provide a shield against bias arising from authors’ conflicts of interest (COI).

The trial, by Leslie John and colleagues, included 838 reviewers of 1,480 manuscripts between June 2014 and January 2018. The peer review process went completely as usual for that journal, except for whether or not the COIs were revealed: it was double-blind peer review, and the editors didn't know there was a trial going on. There were no other big changes at the journal at the same time.

Reviewers were asked to rate manuscripts on the issues the journal usually asks them to assess, for example, "Presents and interprets results objectively and accurately". After the trial, the peer reviewers were also surveyed. That survey found, John et al reported, that the peer reviewers "considered COIs to be important and strongly believed they could correct for the biasing influence of COIs when disclosed". In practice, though,

[D]isclosure had no significant effect on reviewers’ evaluations of manuscripts judged by these measures, even when we limited the sample to manuscripts in which authors reported conflicts [*], and even when we tested for an effect of disclosure by using a variety of different specifications (appendices 8 and 9). Almost none of the reviewers who received the COI disclosure reported having substantially changed the free text of their review in response to that disclosure. Because these are the two major mechanisms by which reviewers might identify and/or correct any bias for editors (and subsequently readers), these findings suggest that disclosure, in its current form, may not be providing the information and guidance that reviewers need to correct for COI induced bias.

* Caveat: the trial was powered to detect an overall effect, but not an effect for only those manuscripts with COI.

Again, this is just one study, at just one journal. But what now? John et al wrote:

In one sense, this ineffectiveness is not surprising, given that reviewers are not given explicit guidance on how to correct for possible COIs. If a disclosure reveals an important COI, should the reviewer recommend rejection? Apply special scrutiny to methods? Consult additional experts? No consensus exists about how reviewers should respond to COI disclosures; nor, hence, is any direction provided about how to do so.

Journals may therefore seek ways of enhancing the use that reviewers make of authors’ COI disclosures. We suspect that such approaches would need to go beyond merely increasing reviewers’ attention to disclosed conflicts. Editorial intervention might be helpful; editors might routinely enlist a highly qualified methodologist or an extra reviewer to evaluate manuscripts with serious COIs.

Leslie John and colleagues (2019). Effect of revealing authors’ conflicts of interests in peer review: randomized controlled trial.

3. Peer reviewers sign their own names more often when they are recommending acceptance of an article.

[Two analyses of peer review reports.]

We already knew that people can be reluctant to be known to give a thumbs down to a manuscript. Now, 2 studies suggest that systems where signing is optional could be introducing new forms of bias we don't yet understand.

In January, Giangiacomo Bravo & co reported on an Elsevier pilot in 5 journals, with 9,220 manuscripts and 18,525 reviews between 2010 and 2017. Knowing that their reports would be published, with the choice of whether or not to sign them, didn't deter people from reviewing or change their behavior much. That said, older academics were deterred a bit, and younger ones and non-academics were keener to review.

Overall, only 8.1% agreed to make their names public, and those who did appeared to be much more likely to have recommended accepting the article for publication.

(I couldn't find the names of the 5 journals in the article, but here they are: Agricultural and Forest Meteorology, Annals of Medicine and Surgery, Engineering Fracture Mechanics, Journal of Hydrology: Regional Studies, International Journal of Surgery.)

In November, confirmation of this phenomenon arrived from Nino van Sambeek and Daniël Lakens, although the rate of signing was much higher at the open journals they studied: 37–38%. They studied peer review reports published for articles accepted in 2 PeerJ journals (8,155 altogether) and 2 Royal Society journals (3,756 from Royal Society Open Science and Open Biology). Signed reports recommended rejection 2% of the time; unsigned ones, 10% of the time.

They concluded:

Together with self-report data and experiments reported in the literature, our data increase the plausibility that in real peer reviews at least some researchers are more likely to sign if their recommendation is more positive. This type of strategic behavior also follows from a purely rational goal to optimize the benefits of peer review while minimizing the costs. For positive recommendations, reviewers will get credit for their reviews, while for negative reviews they do not run the risk of receiving any backlash from colleagues in their field. It is worthwhile to examine whether this fear of retaliation has an empirical basis, and if so, to consider developing guidelines to counteract such retaliation.

(Disclosure: that last sentence cites an argument I made in a blog post: that retaliation is an issue beyond peer review, and that we should work out how to address the problem, not just develop elaborate systems to work around it.)

Giangiacomo Bravo and colleagues (2019). The effect of publishing peer review reports on referee behavior in five scholarly journals.

Nino van Sambeek and Daniël Lakens (2019). Reviewers’ decisions to sign reviews is related to their recommendation.

4. Editorial peer review may be increasing the acknowledgment of study limitations – but without reducing study spin.

[Analysis of published peer review reports, before and after study.]

This would certainly explain one aspect of how internally contradictory so many study reports are, eh? I’ve always thought of it as horse-trading between co-authors, but editors and peer reviewers are introducing some of it, too.

Kerem Keserlioglu & co compared the original manuscripts and published reports of all the randomized controlled trials published in BMC journals and BMJ Open in 2015: 446 pairs of them. Their conclusion:

The average number of distinct limitation sentences increased by 1.39 (95% CI 1.09–1.76), from 2.48 in manuscripts to 3.87 in publications. Two hundred two manuscripts (45.3%) did not mention any limitations. Sixty-three (31%, 95% CI 25–38) of these mentioned at least one after peer review…

Our findings support the idea that editorial handling and peer review lead to more self-acknowledgment of study limitations, but not to changes in linguistic nuance.

This one really is one to lay squarely at the doorstep of editors, isn't it? (See my post about the issue of editors' roles generally.)

Kerem Keserlioglu and colleagues (2019). Impact of peer review on discussion of study limitations and strength of claims in randomized trial reports: a before and after study.

5. Peer review of scientific publications isn't as recent a development as it seems – it's even older than we realize.

[2 historical studies.]

In July, Aileen Fyfe and colleagues published an analysis of the growth of peer review at the Royal Society journals from 1865 to 1965. They dug through the records of 6,665 submitted manuscripts, of which only 665 were rejected. There were 3,716 authors and 1,015 referees. The evolution is fascinating:

[I]n the 1880s, referees had been asked for “your opinion as regards its eligibility for publication in the Philosophical Transactions”; what counted as “eligibility” was assumed to be tacitly understood…Two decades later, referees were told that papers in the Transactions should “mark a distinct step in the advancement of Natural Knowledge”…,and from 1914, referees were asked to look for “approved merit” in papers for both Transactions and Proceedings. The definition of “approved merit” would have been socially constructed within the Fellowship…

Our data show how that attitude to refereeing changed in the early twentieth century, as consulting at least one referee came to be seen as necessary for all papers published in the Society’s journals.

The history they chart is one of editors trying to balance the growth of submissions against the need not to overburden referees: a battle they did not always win. Another interesting aspect of this paper? The growth of elitism:

[T]he post-1890 increase was largely due to a fivefold increase in manuscripts submitted by nonmembers…The proportion of manuscripts by nonmembers had been increasing through the late nineteenth century; but in the twentieth century, they would massively outstrip those authored by Fellows.

This transformation was a direct consequence of the fact that the Society did not grow in size to keep up with the scientific community in the early twentieth century.

The second paper landed in October, with Mark Hooper challenging the idea that scholarly peer review began when journals began. He presented 3 exhibits to support his case:

The first is an example of editorial review in ancient Rome. The second is an example of post-publication peer review involving scholia, beginning in the fourth century. The third is an example of pre-publication review by censors in the sixteenth to eighteenth centuries… What we now give the name peer review is really a group of things that has evolved over time. If we want to learn from the history of scholarly review, then we should take a broader and longer view.

Scholia, by the way, turn out to be what I call marginalia – notes scribbled next to the text. Apparently, annotation is why margins were developed – who knew?

In other news, it turns out lots of us have something in common with Cicero! Hooper quotes a classicist writing about Cicero’s experiences with Atticus, who published some of his work:

Atticus marked passages requiring revision with ‘wafers of red wax,’ and Cicero waited ‘in fear of the wafers’.

And the next time you are juggling feedback on a paper, it might be comforting to know you have yet another thing in common with Cicero:

…since all manuscripts were copied by hand and distributed through social networks, authors were plagued by the problems of what we might now call version control. Cicero encountered this frustration at least once when he gave a draft to Atticus to provide constructive criticism while meanwhile working to improve it further himself. However, another Roman, Balbus, had copied Atticus’s version, so multiple versions of a work were circulating that was, in the author’s view, not yet complete.

There’s lots more – I can’t do Hooper’s paper justice: you just have to read it.

Aileen Fyfe and colleagues (2019). Managing the growth of peer review at the Royal Society journals, 1865–1965.

Mark Hooper (2019). Scholarly review, old and new.

It's great that there's enough research on peer review now that an annual overview is worth it. It's great that there are so many published peer review reports, and that researchers are starting to study them. This potential is one of the arguments in favor of open peer review. But it's deflating that, with all those thousands of journals and well over a million manuscripts being reviewed each year, I still found only a single randomized controlled trial in a whole year. (Please tell me I missed some!) If only there were more …

~~~~

If you are interested in the mechanics of the study of peer review, here are 2 reviews published this year that didn’t make my top 5: a systematic review of tools used to assess the quality of peer review reports (Cecilia Superchi & co found 24), and a scoping review of the roles of peer reviewers by Ketevan Glonti & co.

My posts on milestones in peer review research:

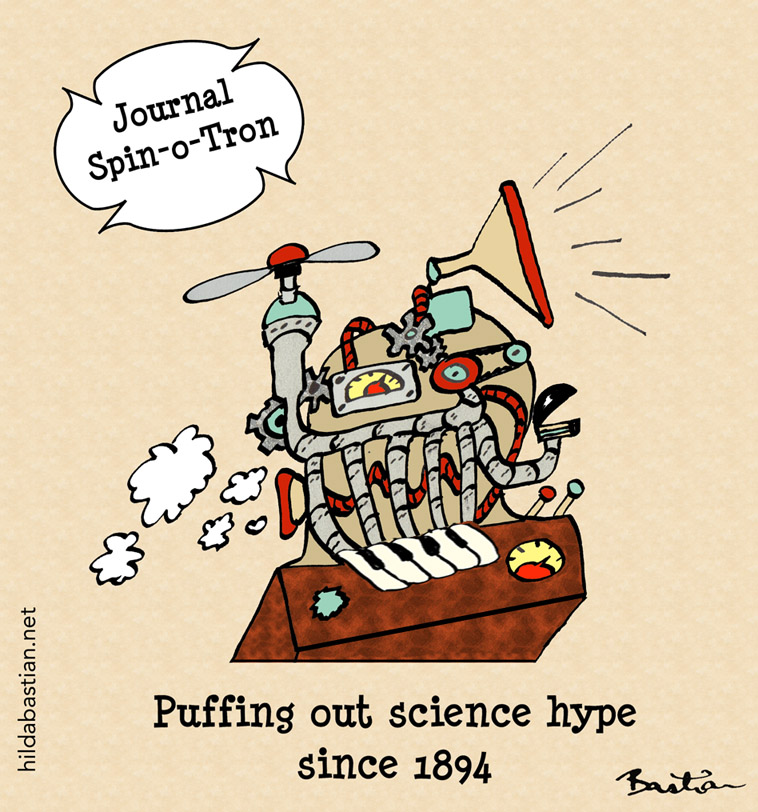

The cartoons are my own (CC BY-NC-ND license). (More cartoons at Statistically Funny and on Tumblr.)

The "Oh, the Things We Could Know!" cartoon pays homage to the glorious Dr. Seuss book, Oh, the Places You'll Go!, which tells us:

You have brains in your head.

You have feet in your shoes.

You can steer yourself

any direction you choose.