
5 Key Things to Know About Meta-Analysis

Knowledge accumulates. But studies can get contradictory or misleading along the way. You can’t just do a head count: 3 studies saying yes minus 1 saying no ≠ thumbs up. The one that says “no” might outweigh the others in validity and power.

You need a study of the studies if you want to be sure what they add up to. And that comes with its own purpose-built set of statistical techniques. Meta-analysis is combining and analyzing data from more than one study at once.
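To see why a head count can mislead, here is a minimal sketch of one common way of combining results: an inverse-variance weighted average, often called a fixed-effect model. The function name and all the numbers are made up for illustration. They don’t come from any real study, and real meta-analyses involve many more decisions than this.

```python
import math

def pool_fixed_effect(estimates, standard_errors):
    """Inverse-variance weighted average of study estimates.

    Returns (pooled_estimate, pooled_standard_error).
    """
    # More precise studies (smaller standard error) get more weight.
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se

# Hypothetical data: 3 small studies say "yes" (positive effect),
# 1 large, precise study says "no" (slightly negative effect).
estimates = [0.3, 0.3, 0.3, -0.1]
standard_errors = [0.25, 0.25, 0.25, 0.05]

# The naive head count: studies saying yes minus studies saying no.
head_count = sum(1 for y in estimates if y > 0) - sum(1 for y in estimates if y < 0)
pooled, pooled_se = pool_fixed_effect(estimates, standard_errors)

print("head count (yes minus no):", head_count)
print("pooled estimate:", round(pooled, 3), "+/-", round(1.96 * pooled_se, 3))
```

With these invented numbers the head count is positive, but the pooled estimate is slightly negative, because the one precise study carries most of the weight.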

Now that we’re increasingly flooded with data and contradictory studies, you’ll see meta-analyses more often. Here are my “top 5” concepts and facts to get a handle on them. [There’s a prequel and a sequel to this post, both listed at the end!]

1. The plots are gripping – don’t just skip to the end.

When data can be pooled in a meta-analysis, they will often be shown in action-packed visual plots.

They’re called forest plots. (Don’t ask me why – no one seems to know. It may or may not have to do with seeing the forest, not just the trees. But it’s definitely not named after a Dr Forest!)

The main concept you need to make sense of a forest plot is the confidence interval (CI). It shows the margin of error around a result.

A confidence interval is what you roughly estimate when someone asks you, “How long will that take?” And you answer, “About 5 to 10 minutes” instead of “7.5 minutes.” It’s critical information. (More about confidence intervals here.)

A forest plot shows the spread of results in each study. This one includes 7 trials of ‘Scared Straight’ programs. Each horizontal line shows the results of one study. The length of the line goes from one end of the confidence interval to the other.

The vertical line in the middle is the point of no effect. If a study’s confidence interval so much as touches that line, then the result is not statistically significant. If a result were completely to the left, it would mean the study showed a decrease in crime. But the combined result is on the right, showing that the programs increased the crime rate.

The diamond at the bottom shows the combined result, calculated with a statistical model. Its width is the confidence interval around that combined result: the diamond will be spread out and wide if there’s not much certainty, and narrower when the data are stronger.
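If you’d like to see where one of those horizontal lines could come from, here is a rough sketch. It assumes the effect measure is an odds ratio, where the point of no effect is 1, and it uses the standard log odds ratio approximation for the confidence interval. The function names and the trial counts are hypothetical, not taken from the ‘Scared Straight’ review.

```python
import math

def odds_ratio_ci(events_a, total_a, events_b, total_b, z=1.96):
    """Odds ratio for group A vs group B, with an approximate 95% CI.

    Uses the usual log odds ratio approximation, so every cell of the
    2x2 table must be non-zero.
    """
    a, b = events_a, total_a - events_a   # group A: events, non-events
    c, d = events_b, total_b - events_b   # group B: events, non-events
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return (math.exp(log_or),
            math.exp(log_or - z * se),
            math.exp(log_or + z * se))

def touches_no_effect_line(low, high, no_effect=1.0):
    """True if the interval reaches the vertical line of no effect."""
    return low <= no_effect <= high

# Hypothetical trial 1: 20 of 50 in the program group reoffended, 10 of 50 controls.
or_1, low_1, high_1 = odds_ratio_ci(20, 50, 10, 50)
# Hypothetical trial 2: 12 of 50 in the program group reoffended, 10 of 50 controls.
or_2, low_2, high_2 = odds_ratio_ci(12, 50, 10, 50)

for name, (est, low, high) in {"trial 1": (or_1, low_1, high_1),
                               "trial 2": (or_2, low_2, high_2)}.items():
    verdict = "touches the line" if touches_no_effect_line(low, high) else "clear of the line"
    print(f"{name}: OR {est:.2f} (95% CI {low:.2f} to {high:.2f}), {verdict}")
```

In this made-up example, the first trial’s line sits entirely to the right of the no-effect line, while the second trial’s line crosses it.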

Another plot that you might come across is the funnel plot. It’s checking if there is reason to suspect that negative studies might be unpublished, although the reliability of this technique is contested. (I’ve explained funnel plots here.)

2. Strength of evidence varies from outcome to outcome.

This is a critical issue – and not only for meta-analyses. Think of it this way. Say you did a survey of 25 people, and they all answered the first 2 questions. But only 1 person answered the last question. You’d feel pretty certain about the data from the first 2 questions, wouldn’t you? But you’d be none the wiser about the subject of the last one.

Same thing with studies and meta-analyses. For any specific question in a meta-analysis, a different number of studies is likely to have answers for it. Each study might have used a different measurement scale, for example. Many will not even have asked most of the questions in the analysis. And the quality of the data in the individual studies will vary too.

This is the most common trap people fall into about meta-analyses. They say (or think), “A meta-analysis of 65 studies with more than 738,000 participants found x, y and z.” But the answer to x may have come from 2 huge, high-quality studies with a lot of good data on x. The answer to y could have come from 48 lousy little studies with varying quality data on y. And the answer to question z… well, you get the picture.

3. Data choices and statistical technique can change a result.

When meta-analyses disagree, confusion can go meta, too. It can have a simple cause – such as the meta-analysts’ questions not being exactly the same, or one analysis being more recent and including an important new dataset.

I go into this more deeply in this post. As I explained there, it’s a little like watching a game of football where there are several teams on the field at once. Some of the players are on all the teams, but some are playing for only one or two. Each team has goal posts in slightly different places – and the teams aren’t necessarily all playing by the same rules. And there’s no umpire.

Different statistical measures and models can be chosen. I’ll talk about some of the data issues specific to meta-analysis in a future post. One of the important ways of dealing with these issues is to pre-plan sensitivity analyses. When you know this or that choice might change the results, you can plan to do the alternative analyses to consider the impact. If you look at the ‘Scared Straight’ review, you’ll see they’ve done that. (No matter how they cut it, their conclusions stayed the same.)
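Here is a small sketch of what one such sensitivity analysis could look like: pooling the same data under a fixed-effect model and under a random-effects model, then comparing. I’m assuming the DerSimonian-Laird estimator for the random-effects version, which is one common choice among several. The function name and the data are invented for illustration, and this is not how the ‘Scared Straight’ reviewers did their analyses.

```python
import math

def pool(estimates, standard_errors, model="fixed"):
    """Pool study estimates; model is "fixed" or "random" (DerSimonian-Laird).

    Returns (pooled_estimate, pooled_standard_error).
    """
    variances = [se ** 2 for se in standard_errors]
    weights = [1.0 / v for v in variances]
    fixed = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)

    if model == "random":
        # Q measures how much the studies disagree beyond chance.
        q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, estimates))
        c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
        df = len(estimates) - 1
        # tau2 is the estimated between-study variance (never below zero).
        tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
        weights = [1.0 / (v + tau2) for v in variances]

    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))

# Hypothetical data: three small studies and one large one that disagree.
estimates = [0.3, 0.3, 0.3, -0.1]
standard_errors = [0.25, 0.25, 0.25, 0.05]

fixed_est, fixed_se = pool(estimates, standard_errors, model="fixed")
random_est, random_se = pool(estimates, standard_errors, model="random")

print("fixed effect:  ", round(fixed_est, 3), "SE", round(fixed_se, 3))
print("random effects:", round(random_est, 3), "SE", round(random_se, 3))
```

With this invented data the two models land on opposite sides of zero, and the random-effects result is much less certain. The random-effects model spreads weight more evenly across studies when they disagree, so the big study dominates less.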

4. Not all meta-analyses are the same.

All sorts of datasets from studies can be meta-analyzed – and in different ways. A key question to consider is whether or not it was part of a systematic review that looked for all the relevant studies on the question.

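Many people use the terms “systematic review” and “meta-analysis” interchangeably. But that’s risky, because you can’t take it for granted that a meta-analysis was part of a systematic review, or that a systematic review includes a meta-analysis.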

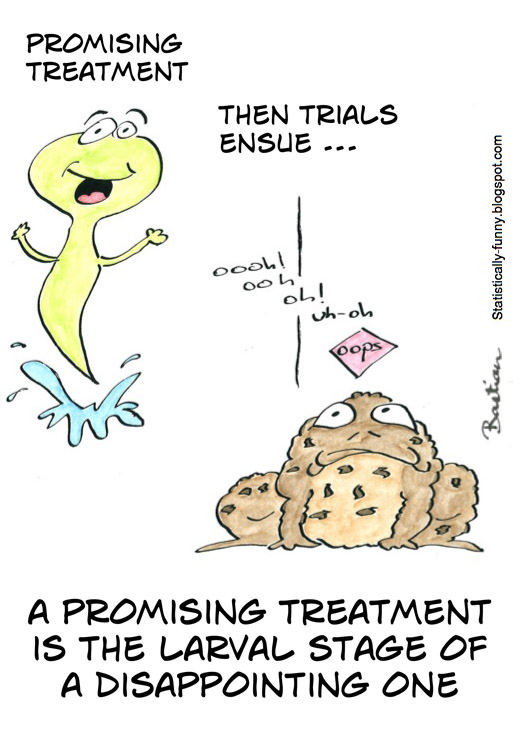

There are also different types of meta-analytic studies. The one in this toad cartoon, for example, is a cumulative meta-analysis. That’s plotting the result of the first study, then pooling it with the second one, then pooling it with the third one and so on. You watch the data shift over time with those.
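As a rough sketch, a cumulative meta-analysis can be thought of as re-running the pooling each time a study is added. This version assumes simple inverse-variance (fixed-effect) pooling, and the study labels and numbers are hypothetical.

```python
import math

def cumulative_meta_analysis(studies, z=1.96):
    """Pool studies one at a time, in the order given.

    studies: list of (label, estimate, standard_error), oldest first.
    Returns a list of (label, pooled_estimate, ci_low, ci_high), where each
    row pools every study up to and including that one.
    """
    results = []
    sum_weights = 0.0
    sum_weighted_estimates = 0.0
    for label, estimate, se in studies:
        weight = 1.0 / se ** 2
        sum_weights += weight
        sum_weighted_estimates += weight * estimate
        pooled = sum_weighted_estimates / sum_weights
        half_width = z * math.sqrt(1.0 / sum_weights)
        results.append((label, pooled, pooled - half_width, pooled + half_width))
    return results

# Hypothetical studies, in order of publication.
studies = [
    ("Study 1", -0.40, 0.50),
    ("Study 2", 0.10, 0.40),
    ("Study 3", -0.35, 0.30),
    ("Study 4", -0.25, 0.15),
]

rows = cumulative_meta_analysis(studies)
for label, pooled, low, high in rows:
    print(f"up to {label}: {pooled:+.2f} ({low:+.2f} to {high:+.2f})")
```

Each printed row is one line of a cumulative plot. Under this simple model the interval can only get narrower as studies are added.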

Another type is the individual patient data meta-analysis based on clinical trials. Some or all of the trialists pool the original patient-level data – not just the aggregated data from the study report. That can get you a whole lot more precision, especially about sub-groups of participants.

A network meta-analysis is different again. It’s also called a multiple treatment (or mixed treatment) meta-analysis. It explores comparisons that were not done by the original researchers – for example, comparing two treatments against each other that were only tested separately in trials against placebos.
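The simplest building block of that idea is an adjusted indirect comparison through a common comparator (often called the Bucher method). The sketch below is only that building block, not a full network meta-analysis. It assumes the effects are on an additive scale, such as log odds ratios or mean differences, and that the two sets of trials are similar enough to compare. The function name and the numbers are hypothetical.

```python
import math

def indirect_comparison(a_vs_placebo, se_a, b_vs_placebo, se_b, z=1.96):
    """Indirect estimate of A vs B from A-vs-placebo and B-vs-placebo results.

    Returns (estimate, ci_low, ci_high).
    """
    estimate = a_vs_placebo - b_vs_placebo
    # Uncertainty from both comparisons adds up.
    se = math.sqrt(se_a ** 2 + se_b ** 2)
    return estimate, estimate - z * se, estimate + z * se

# Hypothetical pooled results on the log odds ratio scale.
a_effect, a_se = -0.5, 0.15   # treatment A vs placebo
b_effect, b_se = -0.3, 0.20   # treatment B vs placebo

estimate, low, high = indirect_comparison(a_effect, a_se, b_effect, b_se)
print(f"A vs B (indirect): {estimate:.2f} ({low:.2f} to {high:.2f})")
```

Notice that the indirect result is less precise than either of the comparisons it was built from. That is one reason these analyses need careful reading.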

And then there’s the “Rolls Royce” of meta-analysis: the prospective meta-analysis. This may be the least biased way to approach multiple studies. Right at the start, the groups of researchers setting out to do the original studies agree on how they will pool and meta-analyze their studies, even before the results of the studies are known at all. Here’s an example.

5. Absence of evidence is not evidence of absence…most of the time.

Last, but definitely not least: just because a study doesn’t find evidence of something, that’s not the same as proving that it doesn’t exist. There’s more on that here.

It can be very convincing when more than one study comes up empty on something – and so easy to jump to the conclusion that there can’t possibly be anything there. But look carefully.
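One way to look carefully is to check the whole confidence interval, not just whether the result was statistically significant. Here is a rough sketch. The function name, the wording of the verdicts, and the “smallest effect that matters” threshold are my assumptions for illustration. In practice, choosing that threshold is a judgment call that should be made in advance.

```python
def interpret(estimate, standard_error, smallest_effect_that_matters, z=1.96):
    """Classify a result by where its 95% confidence interval falls.

    Assumes 0 means "no effect" and the threshold is a positive number.
    """
    low = estimate - z * standard_error
    high = estimate + z * standard_error
    if low > 0 or high < 0:
        return "evidence of an effect"
    if -smallest_effect_that_matters < low and high < smallest_effect_that_matters:
        # The interval rules out every effect big enough to matter.
        return "evidence that any effect is too small to matter"
    # The interval includes zero, but also effects big enough to matter.
    return "inconclusive: absence of evidence"

# Hypothetical results, with 0.2 as the smallest effect that would matter.
print(interpret(0.10, 0.30, 0.2))   # small, imprecise study
print(interpret(0.01, 0.05, 0.2))   # large, precise study
print(interpret(0.50, 0.10, 0.2))   # clear effect
```

The first two made-up results are both “not statistically significant”, but only the precise one tells you much about absence.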

Meta-analysis may have been first used in astronomy and was refined in social science in the ’70s. It really took off in medical research recently. There are links to find out about its use in genome, ecology, crime, education, animal studies and more below.

Meta-analysis is a relatively new statistical technique, and it’s not all that widely understood. People can be very suspicious of things they don’t understand.

However, the risks of trying to make sense of bodies of data any other way are enormous. Here is part of a forest plot of trials of a drug up to 1977, when a meta-analysis began to convince doctors of its value.

It’s no wonder there were competing camps on this drug. What hope would you have of working out its value from the pile of papers those lines represent? It’s now a standard treatment that reduces deaths right after a heart attack, but it wasn’t widely used for many years while the controversy raged.

Meta-analysis is complex and fallible. But consider the alternative.

~~~~

THE PREQUEL AND SEQUEL TO THIS POST!

5 Tips for Understanding Data in Meta-Analysis

Another 5 Things to Know About Meta-Analysis.

More Absolutely Maybe posts tagged Meta-Analysis.

To find systematic reviews of interventions in health care, with and without meta-analyses, try PubMed Health. (Disclosure: Part of my day job.)

Find out more about milestones in systematic reviews and meta-analysis in health in this article by me, Paul Glasziou and Iain Chalmers, with updated data here.

For meta-analysis in genome epidemiology, start with the Human Genome Epidemiology Network (HuGENet).

A starting point for systematic reviews in education, crime and justice, social welfare and international development is the Campbell Collaboration (C2). (I’ve also blogged here about scientific evidence in crime and justice.)

A starting point for systematic reviews on the environment is CEE – the Collaboration for Environmental Evidence.

For animal studies, start at CAMARADES – Collaborative Approach to Meta Analysis and Review of Animal Data from Experimental Studies.

The cartoons are my originals, mostly from Statistically Funny (Creative Commons, non-commercial, share-alike license).

The ‘Scared Straight’ forest plot comes from a systematic review by Petrosino and colleagues.

The excerpt from the Streptokinase forest plot comes from an article by Antman and colleagues.

* The thoughts Hilda Bastian expresses here at Absolutely Maybe are personal, and do not necessarily reflect the views of the National Institutes of Health or the U.S. Department of Health and Human Services.