Study Report, Study Reality, and the Gap Between

We take mental shortcuts about research reports. “I read a study,” we say. We don’t only talk about them as though they are the study – we tend to think of them that way, too. And that’s risky.

Even the simplest study is a complex collection of thinking and activities. You can’t capture it all, even when documenting your thinking is part of the methodology.

Artifacts bubble out of studies: scribbled notes, grant applications, protocols, presentations, datasets, images, graphs, emails, reports to funders, articles… When you attempt to condense all of that into a report, there will always be selective representation.

The report can suffer from weaknesses that the study does not, and vice versa.

“Every scientific investigation is a story,” writes David Schriger, “and it is the details of the story that give it its richness.” You can’t interpret a study without knowing that story and the researchers’ thought processes, he argues.

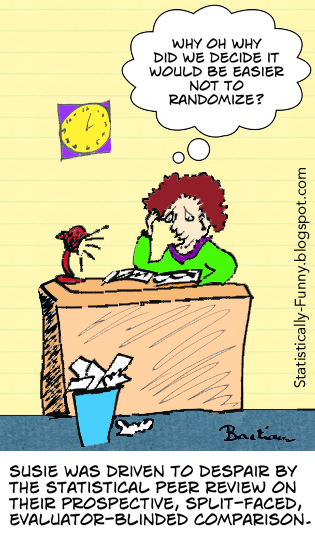

Between the “big idea” that sets someone off on an investigation and the conclusions at the end of it are compromises and diversions that can shrink the capacity of the study mightily – almost beyond recognition, even.

The investigation the researchers originally envisaged can be driving their confidence in the conclusions they reach at the end. Their actual study, Schriger says, can only support far more modest conclusions, so investigators make “bridging assumptions” that bolster what they write.

Writing up a study for publication brings temptations that conflict with faithful warts-and-all reporting. The pursuit of attention, recognition, and influence over what others do makes academic spin an existential threat to scientific perspective.

Critical discrepancies between the various artifacts of a study are common, according to a systematic review of research on clinical trials by Kerry Dwan and colleagues. Even from place to place within an article, key facts can differ. For some issues, like the reporting of subgroup analyses, discrepancies between sources for the one study may be more common than agreement. Selective reporting can be pernicious.

Other people intrude, too, in the journey to a publication. Peer reviewers and editors dip their oars in, sometimes improving things but sometimes steering it all way off course.

We are supposed to be able to count on the reviewers and editors to protect against particularly dramatic differences between a study and a publication. But we can’t take it for granted even there that people are looking thoroughly at the study, not just the report.

Take this example of a trial that was widely reported in the media late last year: somewhere between the methods registered and publication, a placebo arm disappeared and another arm appeared. A discrepancy that surely requires explanation.

Open science is a partial pathway out of this. When more of the artifacts of investigations are out in the open and linked to each other, we shouldn’t be as vulnerable to academic spin. But we need to get used to being more thorough about assessing research.

Scientists being open about the story of how their work was shaped would help, too. When more of the story is out there, so is more of the nuance. And we can start breaking down the habit of thinking of articles as “studies”.

The cartoons are my own (CC-NC license). (More at Statistically Funny.)

* The thoughts Hilda Bastian expresses here at Absolutely Maybe are personal, and do not necessarily reflect the views of the National Institutes of Health or the U.S. Department of Health and Human Services.