
Unpacking the Biggest Trial Yet of Naming Authors in Peer Review

This is a very important trial. I had been looking forward to its results since it started a few years ago. It’s far and away the biggest randomized trial of naming versus not naming manuscript authors to peer reviewers at a journal. It was run at Functional Ecology by Charles W. Fox and colleagues, and it was published earlier this year.

My heart sank when I started to read it back then, though. There are obvious major strengths of this trial – it was so large and randomized. But the way the results were reported made it clear I would need days to unpack the paper and data.

In this post, I dig into the details of this research, and then look at how it fits into the overall picture we’ve gained from previous randomized trials. I focus a lot on the weaknesses of the trial report here, because they lead me to a somewhat different conclusion than the authors.

The authors argue that their trial provides strong evidence that withholding the names of authors substantially reduces prestige bias at the end of peer review. I have somewhat less confidence in the strength of the evidence, and am unsure how big the effect was. Plus I’m doubtful about how much the result translates to the chances of being published at that journal, and how generalizable the results are to other journals.

This trial tackles a serious problem – peer reviewers giving some work an easy pass because of the names attached to it. Trying to hide the names of authors and peer reviewers – “double-blind review” – sounds like a straightforward way to level the journal playing field and thereby reduce prestige and other social biases at major journals. It’s anything but straightforward though.

For one thing, it’s not so easy to hide well-known authors from experts in their field, especially when it’s a small one. With the rise of preprints, it’s likely to be even harder to conceal authorship. One of the reasons it’s hard in ecology, according to the lead author in a blog post, is because “researchers are often associated with specific study organisms/locations and/or questions.”

Another major complication is the influence of editors all along the pathway to publication. I wrote about that in depth here back in 2017. Editors’ content expertise, biases, and priorities are critical – and so are incentives, such as wanting to publish articles that will get a lot of attention and citations. Editors can counteract some of the biases of peer reviewers – and they can add their own.

Occasionally we see studies of biases of individual editors (like this one). And as you’d expect from any group of people, their apparent biases vary a lot, and can have an impact on what gets published. The authors of a modelling study on the impact of biases in journal editorial processes concluded that biased editors could be a bigger threat than biased reviewers.

Consider these points along the path where editors’ judgments could be a thumb on the scale at this ecology journal:

- Deciding to reject manuscripts without going to peer review;

- Determining how many peer reviewers the manuscript gets;

- Choosing which peer reviewers to invite;

- Deciding whether to reject the manuscript after considering the peer review;

- Determining how to handle manuscripts that have been revised in response to the peer reviewers’ comments;

- Deciding whether or not to publish a revised article at the end of the editorial line; and

- Running a new editorial cycle for any manuscript that was re-submitted in a new version after having been rejected, but invited to re-submit after a major revision (such as adding new data).

Those early choices – like having only 1 peer reviewer when the usual was 2 – could reflect an element of predetermination by the editor, and spell the final doom for a manuscript.

These kinds of editorial decision points, along with whether peer reviewers knew who the authors were, are major confounding variables along the path to the outcome which matters – which articles finally appear in the journal. In the report for the new ecology journal trial, this range of variables isn’t adequately considered. And the fate of the manuscripts isn’t followed through to the end – only to the editors’ decision after the initial peer review. That decision is more complicated than accepting for publication or rejecting, which makes this issue particularly important for this trial.

The authors report that in the first 2 years of the trial, 53% of the peer reviewers in the “unnamed authors” group guessed whose research they were reviewing. Whether that affected results isn’t reported. On one level, that doesn’t matter: It’s the effect of the editorial policy that’s being tested. However, previous trials report this, and it can make a difference. The authors might be reporting this in a future paper.

Editorial bias isn’t thoroughly considered either. The question of editorial bias, though, points to an underlying issue with the trial and the results report. All 3 authors were editors at the journal, and they published their article in their own journal. That’s a lot of conflict of interest. As far as I can see, none of them have a strong track record in running trials in humans, and there was no statistician involved.

I think this might explain a fair bit about the problems with the paper. For example, it’s been standard for many years to have a detailed protocol with pre-specified data analysis plan for randomized trials by health and other researchers, including for journal-related research. Awareness of the importance of pre-registration of research is spreading, too. These authors don’t mention a protocol or pre-registration, and I couldn’t find one.

That’s not a small thing. I couldn’t find key details I needed to evaluate this trial in the paper, and that’s not unusual, given the space limitation. That’s not a problem when you can find that extra detail in the protocol.

We know a little about the intentions for the trial from a blog post back in 2019. The trial was supposed to run for 2 years, but it ran for 3. Reporting the reasons for this variation from the original plan and related practices around it matters. Though this may be because of something innocuous – like taking longer than anticipated to reach the target number of participants – it’s good to be reassured that trialists weren’t checking the results along the way and shifting the goal posts.

Even the issue of slow recruitment, if that’s what happened, has some relevance here. Authors were informed that if they submitted to this journal, their manuscripts would be in a randomized trial of naming versus not naming the authors. That may have affected some authors’ decision to submit their work elsewhere. If the submission rate was unusual for the journal, that would be good to know.

The protocol is critical, too, to assess bias in a trial report. You can check for questionable research practices this way – for example, by seeing which analyses were pre-specified. After running a survey, Hannah Fraser and colleagues concluded that questionable research practices appear to be as common in ecology as they are in psychology – which is very common.

In this case, the authors have fortunately provided a basic dataset. (It’s here.) On the plus side, this enabled me to reassure myself about several issues they didn’t report. That said, me trawling around in the data and coming to conclusions is itself a risky business!

The good news about editorial bias within this study is that editors weren’t more likely to reject manuscripts without sending them to peer review from the experimental (unnamed authors) group than the control group (their usual editorial practice) – but they were more likely to send manuscripts on when they were authored by people from nations that rank as highly developed and/or English-speaking. Those were the proxy measures for prestige in this trial.

They also computationally assigned gender to the names of the first authors and assessed that throughout too. I didn’t dig into this further, partly because I wasn’t convinced that method would uncover gender bias if it did exist, especially without data on the editors involved. (The authors concluded there was no effect on gender in their study.)

It wasn’t reported in the paper, but the dataset doesn’t show bias in the overall number of manuscripts sent to an unusual number of peer reviewers. However, this potentially confounding variable wasn’t included in any of the interaction analyses for subgroups that the authors reported, so we don’t know for sure. I’d feel more confident about this trial if I knew there had been a plan to thoroughly analyze for potential impact of all the editors’ choices, and that it was followed.

And there are some signs of editorial decisions swaying the results – I’ll mention one from the paper’s supplementary information later. I quickly saw another during my data trawling on this question – although you would expect spurious statistically significant findings in a dataset this large, and this could just be one of them. Therefore it’s not something to place weight on. The best mean score possible after peer review was 4, and it was highly likely that with a mean score of 3 or higher authors would get a full thumbs up to continue towards publication – but it was much more likely for ones that came from the experimental group. High scores were less likely when the peer reviewers were in the authors-unnamed group: Perhaps the editors had more confidence in high ratings when they were made by that group?

The issue of which manuscripts the editors didn’t reject after peer review brings us to the last major point I’m keeping in mind when I consider what weight to give the outcomes. When peer review was finished, authors could receive one of these decisions:

- An offer to further consider the manuscript based on revision after the peer reviewers’ feedback – corresponding to acceptance with revision at other journals;

- Rejection, but with the door open to consider the submission of a new manuscript; or

- Outright rejection.

For major analyses the authors report and rely on in their conclusions, they combine the first 2 as a positive outcome. The second category they describe as analogous to “declined without prejudice” at other journals. They say “many” of these are typically eventually published at their journal, whereas “nearly all” of the first category are.

From an author point of view, those 2 editorial decisions are very different outcomes, and only one seems to be an acceptance for publication. The much less desirable one was a very common outcome, so it affects the magnitude of effects. The authors include versions of the analyses they report in the paper in a supplementary file, including the results if you don’t combine the categories – and for one of the interactions related to prestige, the result reaches statistical significance for the combined outcome, but not for the group invited to revise only. In addition, the authors are telling us this will affect the likelihood of articles actually being published, which is the main outcome of interest.

These, then, are my conclusions about this trial:

- The trial is at low risk of bias for some features, but other apparent biases weaken the confidence we can have in its results.

- At this journal, withholding authors’ names appeared to reduce prestige bias after initial peer review. There’s a small amount of data still to come, so it’s not yet clear how big the effect was, and there are some caveats – for example, we don’t know whether or not the number of peer reviewers allocated by editors affected results.

- There are some signs that editors’ decisions after the initial peer review re-introduced some bias.

- We don’t know what the impact of the policy was on the chances of an article being published, including to what extent the next steps in the editorial process might favor more advantaged authors or not.

How does this new evidence affect what we knew previously from randomized trials of revealing or concealing authors’ names in peer review at journals? Here are the 10 I know of, including this new one from Fox and colleagues:

| Randomized trial | Field | Journals | Manuscripts | Peer reviewers |
|---|---|---|---|---|
| Alam 2011 | Medicine | 1 | 40 | 20 |
| Borsuk 2009 | Biology | Hypothetical | 1 | 989* |
| Fisher 1994 | Medicine | 1 | 57 | 220 |
| Fox 2023 | Ecology | 1 | 1,432 | Not reported |
| Godlee 1998 | Medicine | 1 | 1 | 221 |
| Huber 2022 | Economics | 1 | 1 | 534 |
| Justice 1998 | Medicine | 5 | 118 | >200 |
| McNutt 1990 | Medicine | 1 | 127 | 252 |
| Okike 2016 | Medicine | 1 | 1 | 119 |
| Van Rooyen 1998 | Medicine | 1 | 467 (of which 158 were in a control group) | 618 (with 11 editors) |

The 3 largest of the previous trials didn’t all have interventions and outcomes in common with the new one (Justice 1998, McNutt 1990, Van Rooyen 1998). For example, the unit of randomization in 2 of them was peer reviewers, not manuscripts. The trial focus could be quality of peer review, not impact on bias. Meta-analyses including these 3 trials concluded that concealing authors’ names didn’t affect the quality of peer review reports or the rate of rejection, “but the confidence interval was large and the heterogeneity among studies substantial.” (Bruce 2016)

All 3 were in prominent medical journals, and the authors either concluded there was no impact of concealing authors’ names, or a small one only among peer reviewers who hadn’t guessed who the authors were. Only 1 of the trials studied more than 1 journal.

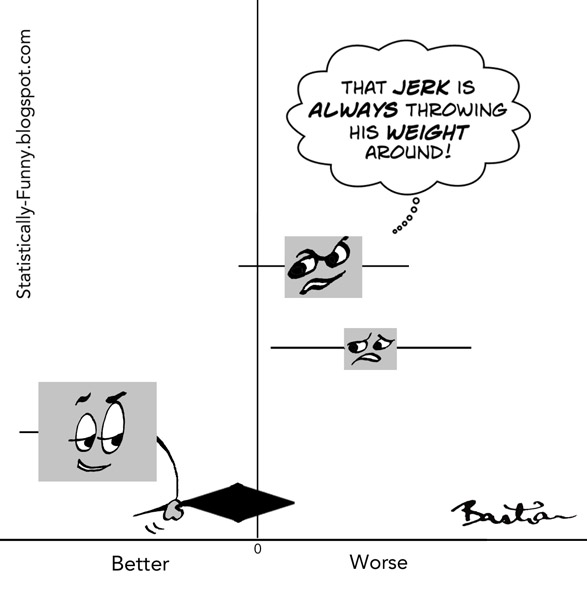

In any event, this new trial dwarfs all the others. Measured by manuscripts, it’s more than twice the size of all the previous trials combined. That means in any meta-analysis of a single outcome, the results from this new one would dominate, much like this:
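For a rough sense of the numbers behind that comparison, the manuscript counts from the table can be tallied directly. This is only a sketch under stated assumptions: the share of manuscripts is a crude stand-in for the weight a trial would get in a meta-analysis, which really depends on the precision of each trial's estimate for a shared outcome. The variable and function names are my own, and whether to count the 158 control-group manuscripts in Van Rooyen 1998 is a judgment call, so both versions are shown.

```python
# Manuscript counts for the previous trials, copied from the table above.
previous = {
    "Alam 2011": 40,
    "Borsuk 2009": 1,
    "Fisher 1994": 57,
    "Godlee 1998": 1,
    "Huber 2022": 1,
    "Justice 1998": 118,
    "McNutt 1990": 127,
    "Okike 2016": 1,
    "Van Rooyen 1998": 467,  # includes 158 manuscripts in a control group
}
FOX_2023 = 1432
VAN_ROOYEN_CONTROL = 158


def fox_vs_previous(exclude_control):
    """Return (previous total, Fox/previous ratio, Fox share of all manuscripts).

    exclude_control=True drops the Van Rooyen control-group manuscripts
    from the previous-trials total (an assumption, not the authors' method).
    """
    total_previous = sum(previous.values())
    if exclude_control:
        total_previous -= VAN_ROOYEN_CONTROL
    ratio = FOX_2023 / total_previous
    share = FOX_2023 / (FOX_2023 + total_previous)
    return total_previous, ratio, share


for exclude in (False, True):
    total, ratio, share = fox_vs_previous(exclude)
    label = "excluding" if exclude else "including"
    print(f"{label} Van Rooyen control group: previous={total}, "
          f"ratio={ratio:.2f}, Fox share={share:.0%}")
```

On these counts, the ratio is above 2 only when the Van Rooyen control group is left out, and the Fox trial supplies roughly two-thirds of all manuscripts either way.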

However, only one of the large trials randomized consecutive manuscripts at a journal (Van Rooyen 1998), as this new one did. That was a trial of the editorial process at the BMJ, with 2 non-editor authors (one of whom was a statistician), and published in JAMA. Although this trial did better on conflict of interest and some other trial design features, it’s a 1998-style report – the available data and detail are flimsy, and the protocol isn’t publicly available. There’s a lot to critique there.

The results aren’t directly comparable, because in the BMJ trial, the editorial decision outcome was more clear-cut and might be more likely to correspond to eventual publication: Articles were accepted with or without revisions, or they were rejected. The power calculation in that trial was based on an outcome measure with more options than the 3-option editorial decision. In that trial, 42% of the peer reviewers accurately identified the authors. And there was a control group of people who weren’t informed they were in a trial, and in which names of authors and reviewers weren’t concealed.

The one trial we have that addressed the question of naming authors at more than one journal found there were important differences between the journals in how feasible it was to successfully conceal authors’ identity, for example.

The Fox trial is the only large one that showed a likely substantial effect of not naming authors, and most trials showed no effect. We don’t know enough to be sure about why the Fox trial differed. It’s the first non-medical journal to report a large randomized trial. We don’t know how the levels of editorial bias at these journals would compare to each other – or how similar it is at other journals that haven’t run trials. The context is going to vary a lot. That means we need quite a few good quality trials at a range of journals to get clarity on this question. We don’t have that. With only 3 trials in the last decade, and only 3 large trials at all, we’re not likely to have strong evidence any time soon.

~~~~

You can keep up with my work via my free newsletter, Living With Evidence.

The cartoons are my own (CC BY-NC-ND license). (More cartoons at Statistically Funny.)

Disclosures: I’ve had a variety of editorial roles at multiple journals across the years, including having been a member of the ethics committee of the BMJ, and being on the editorial board of PLOS Medicine for a time, and PLOS ONE’s human ethics advisory group. I wrote a chapter of the second edition of the BMJ’s book, Peer Review in Health Sciences. I have done research on post-publication peer review, subsequent to a previous role I had, as Editor-in-Chief of PubMed Commons (a discontinued post-publication commenting system for PubMed). I have been advising on some controversial issues for The Cochrane Library, a systematic review journal which I helped establish, and for which I was an editor for several years.